Double-edged tech:

Advanced AI & compute as dual-use technologies

Introduction

Advanced AI¹ is set to assist humanity with countless tasks; however, its ability to serve military purposes has been raising significant concerns within legal and policy analysis circles. Future AI capabilities might lower the barrier for developing biological or chemical weapons or sophisticated cyberattacks by motivated malicious actors. This brief aims to summarise the current debate around the dual-use nature of advanced AI systems.

The first theme to note is a lack of clarity on this issue. Dual-use related risks are recognized in the Bletchley Declaration, the US Executive Order on AI, and the EU AI Act; however, there is no multilateral consensus on whether advanced AI is even considered a dual-use technology, let alone on what robust governance framework could mitigate these risks at a global scale. While there are existing legal frameworks tailored for some of these risk areas, such as non-proliferation agreements on chemical or biological weapons, it is unclear whether these frameworks could assess the capabilities of a sophisticated general-purpose technology like advanced AI and how they could cooperate with existing AI regulatory bodies.

Developing advanced AI requires physical compute resources that are particular to advanced AI development². Controlling these resources could therefore offer an alternative dual-use framework to regulating a sophisticated software technology, which is inherently difficult. However, an effective framework that identifies a risky accumulation or unauthorised use of these compute resources is not fully possible today and requires more hardware R&D. As of today, the only existing dual-use framework on advanced AI compute resources is the unilateral export ban on advanced AI chips that the US has imposed against China³ since October 2022, which is not a multilateral framework that prioritises global risk mitigation. The G7 has founded an international coordination group on semiconductor supply; however, the priorities and goals of this group are yet to be announced. Overall, it is unclear whether a dual-use framing of hardware resources would be effective in mitigating the dual-use risks of advanced AI, or how a multilateral consensus on the governance of these resources could be established.

The rapid development and adoption of advanced AI and the current fragmented regulatory landscape present an urgent need for a multilateral, proactive and continuous dialogue on dual-use aspects of this technology, along with the establishment of necessary frameworks to mitigate its emerging risks.

This brief aims to provide a comprehensive assessment of, first, why advanced AI and the compute resources used in its development might be considered dual-use and, second, the open questions that need to be resolved for the development of any effective dual-use framework on this topic.

¹ We define advanced AI as highly capable, general-purpose frontier AI models and systems.

² See the “Compute as a dual-use technology” section.

³ The most up-to-date version of the relevant US export restrictions targets a broader list of countries, mainly to prevent those countries from supplying advanced AI chips to China.

Defining “Dual-use technologies”

Dual-use technology refers to items, including software and technology, that can be used for both civilian and military purposes. This typically includes items that can be used for the design, development and production of nuclear, chemical, or biological weapons, as well as other weapons of mass destruction. In order to evaluate the dual-use framing of advanced AI through the lens of established dual-use frameworks, we adopt this definition based on the Wassenaar Arrangement, recognized by 42 countries as one of the most widely accepted international agreements on dual-use technologies. However, we observe nuances in the definition of “dual use” amongst different regulatory frameworks and organisations, which might change the scope of such frameworks fundamentally. For example, an internal US regulation on pathogen research uses a broader definition, referring to research that might impact public health and safety, agricultural crops, animals, the environment or national security as “dual-use research.” As rapidly evolving technologies like AI find new and diverse application fields, this lack of consensus on the definition of dual-use technologies might make it more challenging to establish a consensus on the dual-use aspects of this technology, especially at the international level.

Several multilateral or national mechanisms are in place to control dual-use technologies, which include treaties, conventions, bodies and export controls that focus on non-proliferation, global trade and oversight of these technologies. International treaties and conventions mostly set broader rules and frameworks that aim to prohibit the development, production, acquisition, stockpiling, or use of relevant weapon technologies and to promote transparency and confidence-building measures among member states. Notable examples of such treaties include the Treaty on the Non-Proliferation of Nuclear Weapons (NPT) and the Biological Weapons Convention (BWC). Prominent multilateral bodies that enforce such frameworks include the International Atomic Energy Agency (IAEA) and the Organization for the Prohibition of Chemical Weapons (OPCW).

Given the historical dependence of weapon development on physical items, several frameworks have been established to tightly control the export of dual-use items and prevent their misuse. National and international regulations require exporters to obtain licences, ensuring that these technologies are not diverted to unauthorised or hostile entities. At the international level, the list of items subject to export controls is determined and updated through various agreements and bodies, such as the Wassenaar Arrangement or the sanctions issued by the UN Security Council. There are also controls adopted at the national level, such as the United States Export Control Reform Act (ECRA) of 2018, or by a coalition of countries, such as the European Union's Regulation 2021/821. These frameworks collectively work to prevent the misuse of dual-use items while allowing for legitimate civilian applications.

Although the majority of the rules in these frameworks apply to physical goods, the Wassenaar Arrangement and the EU Regulation 2021/821 also cover software technologies. They address the software that is specifically used for the development of weapon systems while keeping the software that is “generally available to the public” out of scope. This raises questions on how these frameworks would classify general purpose technologies like advanced AI.

Advanced AI as a dual-use technology

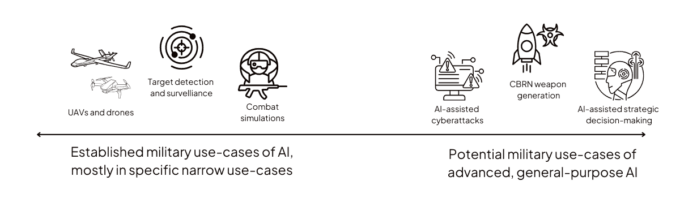

AI has been used for specific military applications over the past decade, such as unmanned aerial vehicles. There have also been smaller, specialised AI models that can be used for a specific military use case, such as biological weapon development. While these AI models have a defined and limited capability in their specific military use cases, advanced AI offers vast capabilities that we are yet to witness, including the potential to foster weapon development. These capabilities could become available to a wide array of unmonitored actors at reduced cost, especially if the models are free to access, vulnerable to theft via cyber attacks, or open to circumvention of their existing safety precautions.

There is limited empirical evidence suggesting that current advanced AI models possess capabilities that can exacerbate dual-use risks significantly. However, the growing capabilities of advanced AI indicate that future models could facilitate biological weapon development, automated cyber-attacks, and scientific development that can be used for the generation or optimization of weapon systems. Current advanced AI developers, including OpenAI and Anthropic, have publicly stated that they are trying to prevent their models from being used for these purposes; however, a malicious actor or nation state might still intentionally build a model with such capabilities, repurpose an existing open source model, or even hijack a closed model, especially in the presence of security vulnerabilities. Existing safety tests also cannot detect risky capabilities that emerge after the tests have been completed. Finally, even if there were effective safety tests, advanced AI developers are not subject to a global mandate to follow them and might apply them haphazardly in order to be the first to bring new models to market.

As of today, advanced AI models are not explicitly classified as dual-use in the widely accepted multilateral frameworks described in the section on defining dual-use technologies. Several frameworks, such as the Australia Group, the Nuclear Suppliers Group, the Missile Technology Control Regime, the EU's Strategy against the Proliferation of WMDs and China's Catalogue of Technologies Prohibited and Restricted from Export, classify “software used for weapon development” as dual-use. This might indirectly encompass advanced AI models; however, these frameworks do not provide explicit specifications for monitoring advanced AI models.

In the advanced AI regulatory landscape, there is inconsistency among major regulations regarding the dual-use classification of advanced AI models.

- The US Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence refers to general-purpose AI models as “dual-use foundation models” based on their potential capabilities in bio-weapon development, software vulnerability exploitation, public influence and deception, and self-replication.⁴

- By contrast, the EU AI Act does not use the term “dual-use” to describe AI models. The EU AI Act covers some of the risk factors considered dual-use by the US, such as public influence and deception or general risks to citizens’ health and safety. However, it does not explicitly address the potential military use cases such as weapon generation.

- At the international level, the Seoul Ministerial Statement from the second AI Safety Summit suggests that the weapon generation capabilities of advanced AI should comply with international laws such as the Chemical Weapons Convention, the Biological and Toxin Weapons Convention, and UN Security Council Resolution 1540.

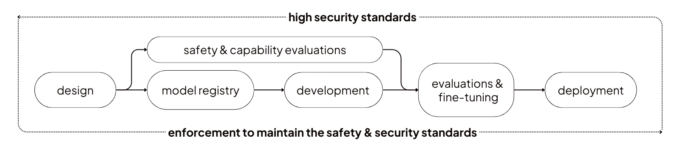

Given that the dual-use risks are directly linked to AI model capabilities, assessing these capabilities is crucial. Current evaluations, however, are often limited to pre-deployment stages and lack internationally accepted standards, highlighting the need for rigorous security measures that are not yet enforced.

To address these challenges, a recent study by the Institute for AI Policy and Strategy proposes an end-to-end governance framework involving model capability assessors, defenders against dual-use capabilities, and a coordinating body to report findings. This framework, which is designed for the US government but could be adopted by other actors, also underscores the lack of standard terminology and robust capability assessments throughout the entire AI lifecycle. It also points to other limitations that make the framework harder to operationalise, such as a lack of clarity on which organisations should own the risk areas across its different stages. There are other governance proposals stemming from national security and dual-use aspects, such as RAND’s research on securing model weights and avoiding the open sourcing of advanced AI models. Although they provide promising solutions, such partial frameworks do not entirely mitigate the dual-use risks, because they either focus on one specific risk area or are not based on a multilateral setting that can address these risks on a global scale.

Given these complexities, other measures, such as export controls on physical hardware resources used in AI training, are being considered as potential means to monitor and regulate advanced AI development.

⁴ The dual-use reference for AI is also adopted by the US Bureau of Industry and Security.

Compute as a dual-use technology

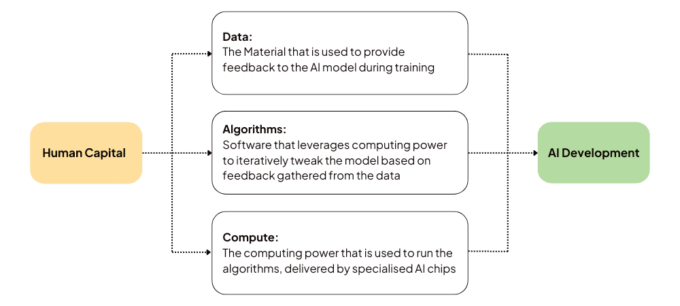

The key inputs for developing advanced AI, shown in Figure 3, are data, algorithms, and compute. Data, algorithms, and advanced AI models, as intangible software goods, are effortlessly shareable and challenging to control, whereas compute, being a physical resource, is easier to trace and manage. Current advanced AI models are trained in energy-intensive, high-cost, large data centres consisting of thousands of sophisticated AI chips connected in clusters with special networking equipment. Under selective export controls, as a potential dual-use framework, the accumulation of these extensive resources might be detectable in practice, as outlined in depth in a previous CFG report.

AI developers can have their own computing clusters with individual components—chips, supercomputers, servers etc.—typically sourced from different providers. But AI developers don’t have to own their clusters. They can also access resources remotely through cloud compute providers, services that own the physical clusters and offer them on a pay-per-use basis or rent a certain amount of compute for an agreed-upon timeframe. Most famously, OpenAI uses Microsoft’s cloud computing services to train their advanced AI models as part of their strategic partnership.

Similar to advanced AI itself, compute resources are not explicitly classified as dual-use items in widely accepted multilateral frameworks. The Wassenaar Arrangement, which maintains an international dual-use classification for a wide variety of physical items, includes controls on lithography equipment used for chip development. However, these controls likely target chips that are integrated directly into weapon systems, not the manufacturing of advanced AI chips.

There are unilateral export controls on advanced AI compute resources, but these fall far short of an international dual-use framework. The US has imposed an export ban against China on certain compute resources⁵ used for training advanced AI since October 2022. In 2023, Japan and the Netherlands, two other leading suppliers of high-performance semiconductor manufacturing equipment, joined the US in imposing restrictions against China. These measures do not stem from international agreements on dual-use risk mitigation, but rather from bilateral security concerns between the US and China. In 2023, China restricted the export of rare earth materials in a tit-for-tat response to the US export bans.

The proposal of using compute as a governance mechanism for advanced AI has also been attracting increasing interest in the research community. However, there are challenges and concerns in effectively controlling compute as a dual-use technology, which will be discussed in the upcoming section.

⁵ In summary, the restriction applies to certain high-end chips and chip manufacturing devices that can produce the most advanced AI chips, and software that is specialised for designing such chips. For more details, see this official statement and this commentary.

Open Questions

While there are many questions to consider on the dual-use framing of advanced AI and compute, we highlight a few that we believe are fundamental and need to be answered before the others can be addressed.

Is dual-use governance sufficient to address the scope of advanced AI?

Dual-use frameworks aim to identify specific use cases of a certain technology, which is inherently difficult for general-purpose AI given that even testing for its full capabilities and potential risks is challenging. Governments have been forming dedicated organisations with special expertise to evaluate the capabilities and risks of advanced AI, execute existing AI regulations or advise on appropriate governance frameworks, such as the US AISI, the UK AISI or the EU AI Office. Unlike these dedicated organisations, regulatory bodies for existing dual-use frameworks might not have the technical expertise to detect general capabilities that can be utilised across different domains, such as facilitating scientific research for both biological and chemical weapon development or designing sophisticated cyber attacks. Moreover, it is unclear how the scope of a potential dual-use framework on advanced AI would be delineated from that of existing AI regulations. For example, the OPCW is responsible for preventing the proliferation of chemical weapons, regardless of whether the process is assisted by AI or not. Even if the OPCW could detect such advanced AI capabilities, it remains unclear whether the OPCW or existing AI regulatory bodies would be the first to take action, and how. Therefore, mitigating the dual-use risks of advanced AI might require more robust governance policies than existing dual-use frameworks provide, enabling collaboration between AI institutions and existing dual-use governance bodies and defining clear responsibilities for each.

What institution is best suited for the dialogue on dual-use advanced AI framing?

Dual-use frameworks need to be multilateral to achieve global risk mitigation. In the current fragmented AI regulatory landscape, determining the appropriate multilateral forum to host the dialogue on the dual-use framing of advanced AI is crucial to maintain the sustainability of this dialogue and turn its potential outcomes into action.

Existing dual-use frameworks have different establishment motives and structures. The Biological Weapons Convention and the OPCW were started through the UN, while the Australia Group is an informal commitment initiated and mainly coordinated by Australia. The Wassenaar Arrangement evolved from a multilateral export control body active during the Cold War era. Similar to these examples, there are multiple forums in the AI regulatory landscape where a dual-use dialogue could start.

The UN founded the High-level Advisory Body on AI in late 2023 to advise on a compact that is planned to be presented to its member states in September 2024. This initiative underscores the importance of international cooperation in AI governance but does not present an action plan, especially on dual-use aspects. The G7 released the Hiroshima AI Process Principles on Advanced AI Governance in 2023 and had AI as one of the top agenda items at its latest summit in 2024. At this summit, the G7 also founded a new international coordination group on the semiconductor supply chain; however, this group’s priorities and action plan are yet to be announced.

Another multilateral effort in advanced AI governance is the AI Safety Summit series, initiated by the UK government, which started in November 2023 with participation of 28 countries including the US, China and the EU. At the latest summit in May 2024, leading advanced AI labs pledged voluntary commitments to develop AI safety and risk frameworks to be published by the next summit to take place in February 2025 in Paris, under the name of AI Action Summit. Even though the summit series provides a venue for continuous dialogue, it is unclear whether a more binding action, such as a treaty that might encompass dual-use cases of advanced AI, will ever be on the agenda of the summit series.

Organisations like the UN and the G7 have extensive experience in establishing international agreements, including on topics that involve military and security considerations. The AI Safety Summit series, on the other hand, has been establishing close ties with the industry and might be able to drive the technical and practical aspects of AI safety and risk management. To maximise impact, a synchronised approach across these organisations might enable them to use their resources and expertise more efficiently.

Are traditional export controls suitable for an effective monitoring of compute resources?

A common dual-use approach is to control the export of physical items that are used in weapon development. However, applying this approach to a general-purpose technology requires a more holistic monitoring framework in order to target only the risky uses of these resources.

Advanced AI development requires numerous sophisticated chips, namely advanced CPUs and parallel-processing chips commonly called GPUs⁶, interconnected in large clusters. There is a vast variety of CPUs and GPUs for consumer products; however, the latest chips used in advanced AI training are specifically designed for this purpose by their developers, which has led to growing interest in monitoring such chips for advanced AI governance.

Even though these chips are critical for advanced AI training, considering every single chip a dual-use technology may be an overreaction: not all clusters of GPUs are necessarily a security concern. For example, several universities in the US have announced that they hold 100-300 NVIDIA H100 GPUs, among the most sought-after GPUs on the market for AI development, for academic research purposes. The same applies to other components of large compute clusters, such as InfiniBand, the networking technology implemented in many data centers used for AI training today, which is also utilized in enterprise databases for fast data transfers.

An effective dual-use framework would involve remotely monitoring the accumulation and exact use case of these chips, rather than relying on traditional export controls. If the location, use case, and user of these chips could be verified throughout the lifetime of each chip, and the chips could be controlled (i.e. deactivated) remotely, it would be feasible to detect unauthorized large-scale use of these chips without restricting the movement of individual chips. There are existing technical proposals to equip chips with mechanisms such as continuous location verification and remote shutdown. However, these mechanisms are not available in existing chips and require significant financial investment and development time, which may leave traditional export controls as the only viable option to mitigate the dual-use risks of advanced AI in the near future. It is important to stress that such an approach is sub-optimal.

⁶ In advanced AI training within exascale clusters, Central Processing Units (CPUs) manage general task execution and control operations. Graphics Processing Units (GPUs) perform the bulk of the essential AI training by handling massively parallel computations. CPUs and GPUs are commonly used terms in the industry. As an exception, Google refers to its specialized AI accelerators as Tensor Processing Units (TPUs).

Will compute continue to be an effective indicator of advanced AI capabilities?

Even if mechanisms for monitoring and controlling chips remotely are in place, the rapid advancements in AI development might still make compute an insufficient proxy for advanced AI capabilities and risks. An important consideration is that advanced AI might be developed via a decentralized compute infrastructure⁷ rather than via co-located chips in data centers. It is unclear whether decentralized compute would be efficient for advanced AI training in the future; however, if hardware and software advancements in chip communication enable it, the compute infrastructure for advanced AI training would become significantly more complex, which could make any dual-use framework based on compute inapplicable. Another consideration is that as other inputs of AI models, such as algorithms and training data, continue to improve, risky capabilities could be achieved with fewer compute resources. This could make current dual-use risk thresholds obsolete over time. While periodically updating these thresholds could be a solution, it is unclear whether the pace of such reviews would match the rapid developments in AI.
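The erosion of a fixed compute threshold can be made concrete with a small worked example. The Python sketch below is purely illustrative: the threshold of 1e26 operations, the assumption that algorithmic progress halves the required compute each year, and the function name `physical_compute_needed` are hypothetical choices made for this illustration, not figures or methods taken from any existing framework.

```python
# Illustrative only: every number below is an assumption, not a figure from this brief.

THRESHOLD_FLOP = 1e26  # hypothetical fixed compute threshold in a dual-use framework
GAIN_PER_YEAR = 2.0    # assumed: algorithmic progress halves the required compute each year


def physical_compute_needed(capability_flop: float, gain_per_year: float, years: float) -> float:
    """Compute needed after `years` to reach a capability that costs `capability_flop` today."""
    return capability_flop / (gain_per_year ** years)


# Track a capability that sits exactly at the threshold today.
for year in range(5):
    needed = physical_compute_needed(THRESHOLD_FLOP, GAIN_PER_YEAR, year)
    status = "at or above" if needed >= THRESHOLD_FLOP else "below"
    print(f"year {year}: {needed:.2e} operations needed, {status} the fixed threshold")
```

Under these assumed numbers, a capability that sits exactly at the threshold today could be reached with less compute than the threshold after a single year, which is why a static threshold would need periodic review.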

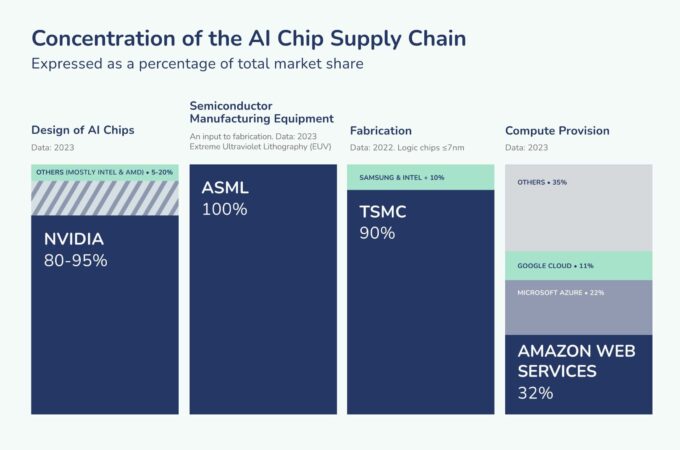

Do we need a multilateral compute governance framework?

As outlined in the section on compute as a dual-use technology, existing export controls on advanced AI compute resources are mainly between China and the US, driven by economic and national security motivations. Such a tit-for-tat exchange is far from a multilateral agreement with the objective of mitigating the dual-use risks of advanced AI. On the other hand, the supply chain of compute resources used in advanced AI training is currently highly concentrated⁸. As seen in Figure 4, only a few countries, namely the US, China, Taiwan, Japan and the Netherlands, hold a significant part of the compute supply chain. This raises the question of whether multilateral agreements on these resources would be as impactful as a series of unilateral measures taken by leading AI actors.

However, the impact of unilateral measures would inevitably be global, which might push this topic up the agenda of international forums. For instance, in its 2024 white paper on export controls, the EU highlighted the sanctions imposed by the US and the Netherlands against China and recommended increased coordination on export control frameworks. This underscores the need for a cohesive and collaborative approach to managing the export of dual-use technologies. As previously mentioned, the new semiconductor coordination group formed by the G7 in June 2024 might take responsibility for establishing this approach, although this is yet to be determined and publicly shared.

⁸ For example, the lithography machines that are necessary for state-of-the-art AI chips are currently supplied only by ASML, a Netherlands-based company. Other parts of the supply chain are also up to 90% concentrated in a single actor or country. For an overview, see section 3.B.4, “Supply Chain Concentration”, in Computing Power and the Governance of Artificial Intelligence.

Conclusion

Advanced AI’s general-purpose capabilities hold significant promise for humanity, but some of those capabilities might be utilised for chemical, biological, radiological and nuclear (CBRN) weapon development, cyberattacks and military decision making. Technologies that enable both civilian and military applications are recognized as dual-use and are subject to regulations set by international treaties and organisations; however, there is no multilateral consensus on whether advanced AI is a dual-use technology or on how the existing AI regulatory landscape and dual-use frameworks could collaborate to address these risks.

AI use in military applications is not new, but as a general-purpose technology, advanced AI requires a particularly robust governance framework that specifically targets its harmful applications without preventing its beneficial ones. An effective governance mechanism to mitigate these risks should be as robust as the technology itself, including capability and risk identification throughout the entire AI life cycle, a relevant reporting and response structure, and a security framework that prevents unauthorised access to these models. Existing dual-use frameworks, such as non-proliferation treaties, may lack the technical expertise to develop a tailored governance framework for this complex technology. It is essential for AI regulatory bodies and existing dual-use frameworks to urgently start a dialogue on how to collaborate and define a response mechanism, as the capabilities, and therefore the risks, of advanced AI grow rapidly.

Given the strong dependency of advanced AI on physical compute resources, an alternative dual-use framework for advanced AI is to control the export and use of these resources, such as sophisticated AI chips. Even though this is a more tractable approach than governing a software technology, such a framework should target the risky accumulation and utilisation of these resources rather than impose a blanket export ban, so as not to hinder the beneficial development of this general-purpose technology. More R&D is required on existing chip technology to enable such smart monitoring capabilities. The compute resources needed for advanced AI development are also subject to change as the technology advances. Therefore, a dual-use framework on compute resources would only be effective if chips are enhanced with the necessary monitoring mechanisms and the framework itself is flexible enough to update its thresholds as the technology advances.

Whether it targets advanced AI directly or compute resources, the dialogue on the dual-use framing of advanced AI should start at an international forum, given that the risks posed are global in scale. This would also promote the democratic governance and oversight of this transformative technology, ensuring that its evolution is shaped by global consensus rather than being driven by a few key players in the AI industry. The UN and the G7 both have initiatives to define best practices in AI governance, and are directly or indirectly involved in existing dual-use frameworks, such as chemical weapons non-proliferation or the Wassenaar export controls on dual-use items. The AI Safety Summit series, on the other hand, has the objective of coordinating an international response to advanced AI risks and has been building ties with the industry and technical experts in the field. These forums present opportunities to start a sustainable dialogue on the dual-use framing of advanced AI and enhance international coordination on this topic.