Context: Current AI trends and uncertainties

This section outlines the technical, social and geopolitical trends and developments underlying our scenarios. It presents the key trends, assumptions, and uncertainties that shape our view of plausible AI futures—explaining why we consider each scenario plausible. For a more detailed description of how we created these scenarios, please see Methodology: How we came up with these scenarios.

Technical Background – What’s driving the AI boom—and could it stall?

Four reinforcing trends have supercharged AI progress so far

AI capabilities have broadened rapidly in recent years—from static question answering and pattern recognition to complex reasoning, coding, and autonomous task completion. This leap has been driven by four mutually reinforcing trends: better hardware, more efficient algorithms and data use, rising investment, and the emergence of new training paradigms enabled by an increasingly powerful AI tech stack.

Trend 1: Hardware scaling.

Training compute has been increasing by roughly 4–5× per year since 2010, according to Epoch AI estimates[1]. This trend has remained remarkably consistent over time. More compute allows larger models to be trained on more data and accelerates algorithmic experimentation at scale. The result is a virtuous cycle: better hardware enables bigger models, which in turn motivate further investment in hardware. Recent releases from OpenAI and DeepSeek[2] also illustrate how scaling inference compute, the compute used when models are deployed rather than trained, can further improve outputs. This shift enables models to “think longer” at inference time, generating higher-quality results and opening up possibilities for more demanding downstream applications. In turn, high-quality model outputs can serve as improved training data for future systems, creating a bootstrapping loop that may help address data availability bottlenecks[3].
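To make the 4–5× annual growth rate more concrete, the short sketch below converts an annual growth factor into an equivalent doubling time and a ten-year cumulative multiplier. It is plain arithmetic that assumes growth stays a constant exponential; the function names are illustrative and do not come from Epoch AI.

```python
import math

def doubling_time_months(annual_factor: float) -> float:
    """Months needed to double, assuming constant exponential growth."""
    # Solve annual_factor ** (t / 12) == 2 for t.
    return 12 * math.log(2) / math.log(annual_factor)

def cumulative_growth(annual_factor: float, years: float) -> float:
    """Total multiplier after `years` of compounding at `annual_factor` per year."""
    return annual_factor ** years

for factor in (4.0, 5.0):
    print(f"{factor:.0f}x/year: doubles every {doubling_time_months(factor):.1f} months, "
          f"{cumulative_growth(factor, 10):,.0f}x over ten years")
```

At 4× per year, training compute doubles roughly every six months; at 5×, roughly every five months. Over a decade, that small difference in rate compounds into a gap of almost an order of magnitude.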

Trend 2: Algorithmic and data efficiency.

Algorithms are becoming significantly more efficient: the compute needed to reach a given level of performance has been falling by approximately 3× per year[4]. Smarter model architectures, better training objectives, and innovations in data selection and augmentation all contribute to higher performance per FLOP. Algorithmic progress also supports the growing use of synthetic data, where models generate high-quality training examples for themselves, augmenting scarce real-world data and potentially overcoming looming “data wall” concerns[5]. Nonetheless, whether such bootstrapping will be sufficient to sustain long-term progress remains an open question.
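To illustrate what a roughly 3× annual efficiency gain implies, the sketch below computes how much training compute would be needed to match a reference model's performance one, two, and three years later. It assumes the 3× rate holds constant and applies uniformly to the whole training run; the 1e24 FLOP reference budget and the function name are hypothetical.

```python
def compute_to_match(reference_flop: float, years_later: float,
                     algo_gain_per_year: float = 3.0) -> float:
    """FLOP needed to match a reference model's performance `years_later` years on,
    assuming algorithmic efficiency improves by a constant factor each year."""
    # Each year of algorithmic progress divides the required compute by algo_gain_per_year.
    return reference_flop / algo_gain_per_year ** years_later

reference = 1e24  # hypothetical training budget of a reference model, in FLOP
for years in (1, 2, 3):
    print(f"after {years} year(s): {compute_to_match(reference, years):.2e} FLOP")
```

Under these assumptions, the same performance would cost about a ninth of the original compute after two years, which is one reason capable models spread quickly beyond the labs that first trained them.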

Trend 3: Surging investment.

These technical advances are underwritten by massive increases in capital. Billions are being poured into compute infrastructure, foundation model training, and specialised talent. Private investment reached €84.7 billion in 2023[6], and Big Tech spent nearly €150 billion on AI infrastructure alone in the first three quarters of 2024[7]. Public investment also continues to rise, including state-backed compute clusters in the EU and China[8]. As long as capital continues to flow at this rate, training larger and more capable systems appears feasible. However, this trend is not immune to macroeconomic conditions, and a slowdown in investment could disrupt the current pace of progress.

Trend 4: An increasingly powerful tech stack enabling new training paradigms.

As AI systems have improved, they have become better at helping to improve themselves. Beyond traditional scaling, recent breakthroughs involve shifting from purely pretraining-based approaches to novel post-training paradigms. These include longer inference traces (allowing models to reflect before answering), fine-tuning with human feedback or chain-of-thought examples, and distillation of high-performing models into smaller, cheaper versions. The o1 release[9] illustrates how inference compute can be used creatively to produce higher-quality outputs that inform future model training. Meanwhile, early signs of AI models accelerating parts of the R&D process are beginning to emerge: developers report using current systems to automate tasks[10] such as writing evaluation harnesses, generating training data, and suggesting architectural tweaks. Over time, this could lay the groundwork for a self-reinforcing AI R&D loop[11].

Together, these trends produce an estimated 12–15× gain in effective training compute per year, because roughly 4–5× annual growth in physical compute multiplies with roughly 3× annual gains in algorithmic efficiency. That rate of advance has no parallel in other fast-growing sectors: solar energy installations[12], by contrast, grew just 1.5× annually between 2001 and 2010.
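The 12–15× figure follows from multiplying the two rates rather than adding them: a 3× gain in algorithmic efficiency makes every unit of physical compute worth three times as much. The sketch below reproduces that arithmetic and, as an illustrative comparison only, compounds both the AI rate and the 1.5× solar rate over an arbitrarily chosen five-year horizon.

```python
from itertools import product

compute_growth = (4.0, 5.0)   # physical training compute, x per year (range from the text)
algo_growth = (3.0,)          # algorithmic efficiency, x per year (approximate point estimate)

def effective_growth(compute: float, algorithms: float) -> float:
    """Effective-compute multiplier per year: the two gains compound multiplicatively."""
    return compute * algorithms

gains = [effective_growth(c, a) for c, a in product(compute_growth, algo_growth)]
print(f"effective compute grows {min(gains):.0f}-{max(gains):.0f}x per year")

# Illustrative comparison with solar installations at ~1.5x per year.
years = 5  # arbitrary horizon chosen for the comparison
print(f"over {years} years: AI {min(gains) ** years:,.0f}x vs solar {1.5 ** years:.1f}x")
```

Even at the low end of the range, five years of compounding yields a multiplier in the hundreds of thousands, against a single-digit multiplier for solar.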

These accelerating inputs have already enabled new and agentic capabilities, where AI systems autonomously plan and execute tasks by interfacing with tools, APIs, and other models to fulfil high-level goals. IBM’s recent developer survey[13] found that 99% of enterprise AI builders are exploring or deploying agents. Anthropic’s Claude Code[14] and other examples show these systems moving from research to real-world workflows.

Early metrics suggest this progress is not just anecdotal. According to recent research by METR[15], the length of software tasks (measured by how long they take human experts) that AI agents can complete autonomously with 50% reliability has doubled roughly every seven months for the past six years. If this exponential trend continues, AI agents could soon handle software tasks that currently take human developers days or weeks, dramatically expanding the scope of feasible automation.
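A simple way to see what a seven-month doubling time implies is to project forward from a starting task horizon. The sketch below assumes the trend continues unchanged and starts from a hypothetical one-hour horizon (an illustrative value, not METR's measurement); the function names are likewise illustrative.

```python
import math

DOUBLING_MONTHS = 7.0  # approximate doubling time of the task horizon reported by METR

def horizon_after(months: float, current_hours: float) -> float:
    """Projected task horizon (in human working hours) if the doubling trend holds."""
    return current_hours * 2 ** (months / DOUBLING_MONTHS)

def months_until(target_hours: float, current_hours: float) -> float:
    """Months until the horizon reaches `target_hours`, under the same assumption."""
    return DOUBLING_MONTHS * math.log2(target_hours / current_hours)

current = 1.0  # hypothetical starting horizon: a one-hour task
for label, target in (("one work day (8 h)", 8.0), ("one work week (40 h)", 40.0)):
    print(f"{label}: ~{months_until(target, current):.0f} months")
print(f"after 24 months: ~{horizon_after(24.0, current):.0f} hours")
```

Under these assumptions, the horizon would grow from one hour to a full work day in about 21 months and to a full work week in just over three years. Any change in the doubling time or the starting point shifts these dates considerably.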

Still, none of these trends is guaranteed to continue indefinitely.

Scaling laws are empirical observations, not natural laws. Progress could plateau if any of these inputs—compute, data, algorithms, capital, or system design—hits a wall. Some observers warn that AI progress is already confronting limits[16] on data quality or facing diminishing returns[17]. Others argue that there is no clear ceiling yet, given AI systems are not bound by human biological constraints like low memory bandwidth or slow communication.

In short, we are seeing compounding inputs driving rapid AI advances—but whether these trends will continue, accelerate, or slow down remains deeply uncertain. This uncertainty is precisely what our scenarios are designed to explore.

AI adoption is surging in firms, but public use still lags.

Adoption is crucial for determining the impact[18] of AI technologies. Despite rapid progress in capabilities, actual usage varies significantly. Business adoption in the EU remains modest. Eurostat data from early 2024[19] shows that only 13.5% of enterprises were using AI, up from 8% in 2023. However, a February 2024 survey by Strand Partners[20] found that 33% of EU businesses had adopted AI in 2023, and 38% were experimenting with it.

In a summer 2024 survey of over 30,000 Europeans by Deloitte[21], 44% had used generative AI tools, mostly for personal use, and 65% of business leaders said they were increasing investments due to realised value. According to the May 2024 McKinsey Global Survey[22], 65% of organisations reported regular use of generative AI, nearly double the figure from ten months earlier.

Public uptake appears more limited: a recent Pew Research survey[23] found only about a third of U.S. adults had ever used an AI chatbot. Usage patterns show AI being used mainly for information retrieval, idea generation, content creation, translation, and text summarisation. Deloitte’s research[24] also found that employees see AI as improving both their work and career prospects. Meanwhile, McKinsey’s data[25] suggests businesses are expanding AI use across functions, especially in marketing, product development, and IT.

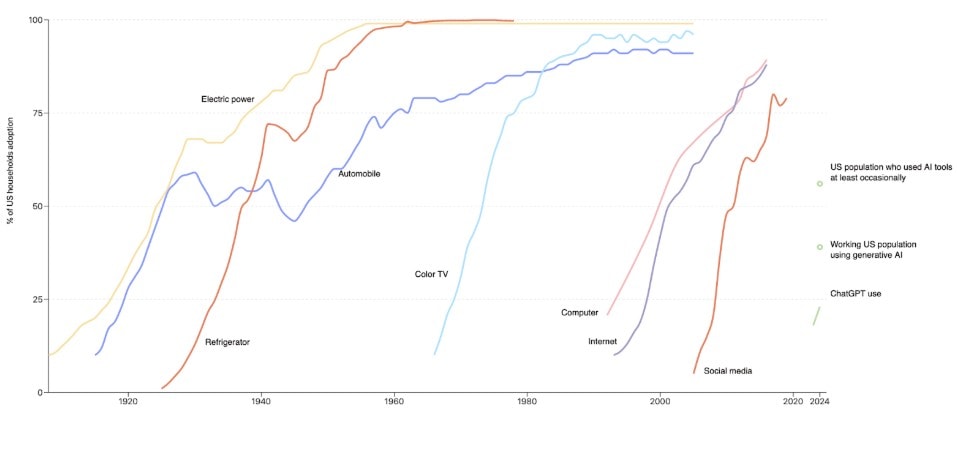

Globally, adoption appears to be accelerating rapidly, particularly for ChatGPT, which became the fastest-growing app in history[28] and now has 400 million weekly users[29]. According to a 2024 University of Toronto survey[30], 73% of Europeans have heard of ChatGPT, and as of early 2024, 23% of U.S. adults reported having used it[31]. Compared to earlier technologies, modern AI is being adopted at an unusually fast rate[32], with ChatGPT outpacing the rapid growth of social media platforms like Instagram and TikTok.

Open-weight AI models fuel innovation and magnify global risk.

A central uncertainty in the future of AI development is whether open-weight models will remain competitive with closed-weight systems, or whether the frontier will consolidate around a small number of proprietary labs. This question sits at the heart of strategic debates around innovation, governance, and global risk.

On one hand, open-weight models fuel decentralised innovation. They expand access to cutting-edge capabilities, enable downstream experimentation, and reduce dependency on a handful of dominant firms. This can support broader adoption, help international developers leapfrog compute barriers, and prevent excessive concentration of power in a few labs—particularly those protected by U.S. export controls[33] or market advantage.

However, as these models approach the frontier, governance challenges escalate. Open access increases the risk of capabilities falling into adversarial hands. It introduces difficulties in enforcing safety norms, limits post-deployment control, and reduces the ability to manage misuse at scale[34]. These risks include model jailbreaking, malware generation, and the development of autonomous agents for cyber operations. Wider accessibility also complicates efforts to build global AI governance frameworks, especially in the context of intensifying geopolitical rivalry.

Recent examples, such as DeepSeek’s open release of powerful models[35], have intensified this debate. If open-weight models continue to improve rapidly, they could destabilise the cybersecurity offense–defense balance. As noted in the International Scientific Report on the Safety of Advanced AI[36], defenders could use closed models to automate threat detection and scale response—but only if they retain exclusive access. If open-weight models match or surpass these capabilities, malicious actors may gain similar tools, making defense harder to sustain.

The economic implications are just as uncertain. Closed-weight dominance could entrench leading labs, reduce model diversity, and limit global access to transformative systems. Open-weight leadership, meanwhile, could promote more distributed gains, but at the cost of control, safety, and traceability.

AI could be a transformative force for economic and scientific progress

AI has the potential to unlock major economic and societal benefits. PwC estimates a global GDP boost of up to €15 trillion by 2030[37], while McKinsey projects that generative AI could add €2.3–€3.9 trillion per year[38]. These types of gains are already being realised by early adopters across software, customer service, and R&D. Meanwhile, AI is accelerating scientific progress: DeepMind’s AlphaFold helped solve the protein folding problem, and its impact was recognised in the 2024 Nobel Prize in Chemistry[39]. AI systems are now used in molecular design, crystal discovery, and early-stage drug development.

AI could also help address a whole range of other societal issues. In climate and infrastructure, tools like GraphCast offer state-of-the-art weather forecasting[40], while energy agencies are deploying AI to optimise power grids and speed up renewable integration[41]. Programmes like AI4AI in India have shown how AI can support adaptation too—raising crop yields while reducing chemical use[42]. In education and accessibility, GPT-4 tutors and AI assistants are helping students and workers thrive. While challenges around distribution and governance remain, AI—when deployed responsibly—could enhance growth, resilience, and public services alike.

Societal Background – How are people, markets, and states reacting?

AI is reshaping jobs and hiring now, not just in the future.

We are already seeing significant labour market impacts from AI, and the evidence suggests these effects will intensify. AI-driven disruption is now observable across multiple sectors rather than being a purely theoretical future concern. The tech industry has reported over 5,400 U.S. job cuts directly attributed to AI implementation[43] since 2023, while major companies like IBM have paused hiring for thousands of back-office positions[44] identified as AI-replaceable. Beyond direct layoffs, broader hiring patterns are also shifting: organisations using AI post 12% fewer non-AI job vacancies[45], suggesting a structural change in workforce planning. Finally, PwC research indicates that one in four CEOs plan to reduce their workforce by at least 5%[46] in the coming year due to generative AI. Taken together, the evidence strongly suggests that AI-driven labour market transformation is already underway.

Public backlash is already reshaping the AI landscape.

As AI systems become more visible and powerful in daily life, backlash against their use is also intensifying. Workers have taken to the streets over fears of job replacement and data exploitation, as seen in the Hollywood writers’ strike[47], where screenwriters demanded protections against AI-generated scripts, and the SAG-AFTRA actors’ strike[48], where performers fought to retain control over digital replicas of their image and voice. Online, over 8,000 Reddit communities[49] went dark in response to API pricing changes tied to AI training data. Most recently, UK musicians released a silent protest album on Spotify[50], warning that new policies risk handing creative rights to tech companies.

These episodes reflect a broader pattern of growing public scepticism and politicisation. While awareness of AI is now widespread in Europe, optimism remains limited. The Stanford AI Index[51] finds that 39% of Europeans believe AI will do more good than harm, compared to 83% in China and 80% in Indonesia. Nonetheless, sentiment in Europe is becoming more fluid: France, Germany, and the UK have all seen 8–10 percentage point increases in AI optimism over the past year, even as concerns over job loss, surveillance, and fairness persist. Civic action is intensifying in parallel. Rights groups in the UK have launched rolling demonstrations against live facial recognition[52], while protesters also recently gathered at OpenAI’s headquarters to decry military partnerships[53]. As these technologies become increasingly entangled with labour markets, civil liberties, and national security, public opinion is likely to become a central driver of AI policy and corporate strategy alike.

Global rivalries are driving and destabilising AI progress

In scenarios where AI capability progress slows, government intervention will likely be limited to national or regional regulations, with occasional high-level global agreements[54] (e.g., overarching goals, collaboration through AI Safety Institutes[55], or sharing data on compute clusters and training runs). We don’t expect the (soft) nationalisation[56] of frontier labs in these worlds, as the perceived urgency is unlikely to be strong enough. It’s also unlikely that all major nations will commit to deep AI collaboration, particularly between the U.S. and China, unless spurred by significant accidents or misuse.

Geopolitical competition is becoming a key force shaping the future of AI, particularly in the context of U.S.-China rivalry and Taiwan’s strategic importance. China’s rapid economic rise and assertive policies have challenged U.S. global leadership. Taiwan plays a critical role in this competition through its dominance in semiconductor manufacturing, especially via TSMC, making it a potential flashpoint in a growing AI arms race.

Taiwan’s semiconductor industry, often referred to as the “Silicon Shield,” has historically deterred conflict through economic interdependence. However, recent export controls have cut China off from Taiwan’s AI chip supply, threatening to weaken this shield[57]. This could even turn Taiwan into a “Silicon Attractor,” with China potentially incentivized to cut off U.S. access to chips or, more opportunistically, to seize TSMC’s facilities[58]. Taiwan’s pivotal role in the global tech supply chain, coupled with the United States’ ambiguous commitment to its defence, makes any confrontation over Taiwan extremely high-risk. The protective logic of the “Silicon Shield” may be eroding further: TSMC recently announced that 30% of its most advanced chip production will take place in Arizona once its American fabs come online[59], potentially weakening Taiwan’s monopoly on cutting-edge fabrication and reshaping the strategic calculus. Forecasters currently estimate a 30% chance[60] of a Chinese invasion of Taiwan before 2035.

More recently, the emergence of DeepSeek’s advanced AI models, particularly V3 and R1, has significantly impacted the global AI landscape. DeepSeek V3[61] achieved performance comparable to GPT-4o at the time of its release while using only a fraction of the training compute, demonstrating significant algorithmic efficiency gains in the training process. Meanwhile, DeepSeek R1[62] offers reasoning capabilities akin to OpenAI’s o1, but at a reduced cost. Most notably, DeepSeek has made these models freely downloadable, which raises a new set of concerns and opportunities.

If progress continues rapidly, we may see drastic changes to the developer landscape. Governments could introduce stringent (inter)national regulations if they fear losing control, or if AI technology leads to large-scale harm. Alternatively, they could form public-private partnerships to develop military applications of AI[63] in a race to beat their adversaries. Indeed, AI has become a core geopolitical priority, with both the U.S.[64] and China[65] aiming to take the lead in its development—though in both cases, the ultimate strategic objectives remain somewhat unclear. In China’s case, the focus appears to be on building domestic AI champions and securing strategic leadership, rather than pursuing a full ideological commitment to artificial general intelligence. Industry leaders in the U.S. are also seemingly opening the door[66] for public-private partnerships to outcompete adversaries. This sentiment is further reinforced through calls for an AI Manhattan Project[67]. Alternatively, governments might fail to act swiftly, allowing private companies to gain power until they hold substantial leverage over governments. In any fast-moving scenario, open-weight developers seem less likely to remain close to the frontier, as positive feedback loops will enable closed labs to expand their lead and governments will aim to control dynamics and prevent misuse.

Outside of AI, geopolitical uncertainty is equally high

The broader geopolitical environment is increasingly marked by instability. Russia’s invasion of Ukraine has triggered a rearmament across Europe and shifted budget priorities towards defence, raising questions about long-term economic resilience and innovation funding. Simultaneously, the Middle East has seen renewed escalation, including the ongoing conflict in Gaza and rising tensions involving Iran, increasing the risk of wider regional conflict.

These challenges are further compounded by rising trade volatility. Tariff escalations under the Trump administration have introduced significant economic uncertainty[68], placing particular strain on businesses and key technology exporters and prompting a broader reassessment of global trade relations. Major chipmakers like Nvidia, AMD, and ASML have reported substantial losses following renewed U.S. export controls on AI chips to China[69], while the World Trade Organization projects that U.S.-China merchandise trade could fall by as much as 80%[70]. This fragmentation adds another layer of instability to global AI development, with supply chains, industrial strategies, and international cooperation increasingly shaped by geopolitical risk.

More broadly, the return of great power competition is making advanced technologies, including AI, central to geopolitical strategy. U.S.-China rivalry shapes trade policy, export controls, and global AI governance efforts. These developments unfold against a backdrop of weakening international norms and increased focus on national resilience.

AI investment is booming, with massive spending and rising global competition

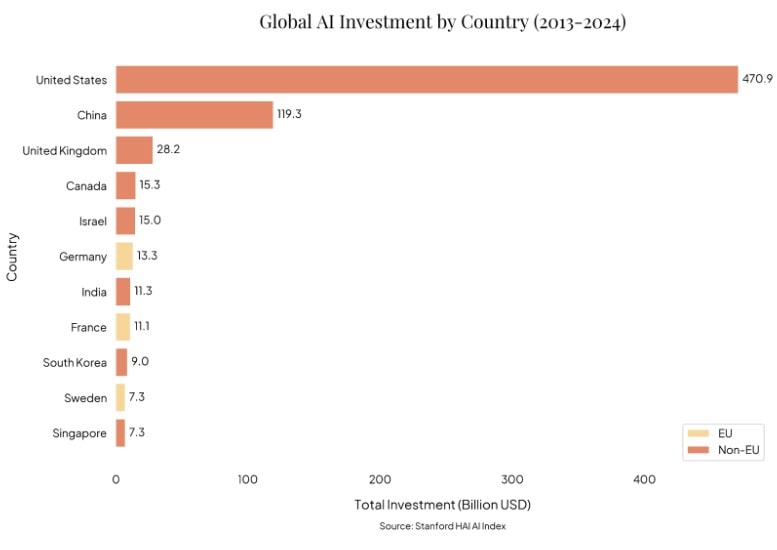

Investment into AI continues at historic levels. Generative AI funding reached €22.2 billion in 2023[71], up 8× from 2022. In total, private AI investment reached €84.7 billion[72], with the US leading (€59.1 billion), followed by China (€6.9 billion) and the UK (€3.3 billion). Europe, despite its regulatory leadership, lags behind in private investment, though there has recently been a push to catch up.

Big Tech is also scaling infrastructure rapidly. In the first three quarters of 2024, Microsoft, Meta, Alphabet, and Amazon spent nearly €150 billion on AI infrastructure[73], with forecasts of €220.3 billion in 2025[74]. The Stargate project, backed by OpenAI, SoftBank, and Oracle, aims to invest €440.6 billion in AI data centres over four years[75].

AI is beginning to generate substantial revenues for leading firms. Microsoft is already approaching €8.8 billion in AI revenue in 2024[76], with projections to reach €88.1 billion by 2027[77]. OpenAI has likely generated between €6.5 and €7.4 billion in revenue in 2024[78], driven by approximately €4.6 billion from ChatGPT subscriptions and an additional €1.8 billion from API sales, up from €1.4 billion the year before[79]. OpenAI nonetheless remains cash-flow negative, because it reinvests this revenue, together with substantial additional funds raised from investors, into new generations of products to stay ahead of the competition; some estimate[81] it will not reach positive cash flow until 2029. Even so, this trajectory indicates that highly capable AI systems can become commercially viable in a relatively short time.

Moreover, the costs of using AI services are falling dramatically. According to Epoch AI, the price of API inference (where the AI model runs in the developer’s data centre) for a given level of performance has fallen by between 9× and 900× per year, depending on the performance level[80]. If this trend continues, it could dramatically accelerate the accessibility and global diffusion of AI technologies.
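The 9× to 900× annual declines in inference prices are easier to interpret as monthly rates. The sketch below assumes a constant annual decline and a hypothetical starting price of EUR 10 per million tokens for a fixed level of performance; neither the price nor the function names come from Epoch AI.

```python
def price_after(initial_price: float, annual_drop: float, years: float) -> float:
    """Price after `years`, assuming it falls by a constant factor `annual_drop` each year."""
    return initial_price / annual_drop ** years

def monthly_decline(annual_drop: float) -> float:
    """Equivalent fractional price decrease per month for a given annual drop factor."""
    return 1 - annual_drop ** (-1 / 12)

start = 10.0  # hypothetical price in EUR per million tokens at a fixed performance level
for drop in (9.0, 900.0):
    print(f"{drop:.0f}x/year: EUR {price_after(start, drop, 1):.4f} after one year, "
          f"about {monthly_decline(drop):.0%} cheaper each month")
```

At the low end of the range, prices would fall by roughly 17% a month; at the high end, by roughly 43% a month.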

AI Risks – what could go wrong and how bad could it get?

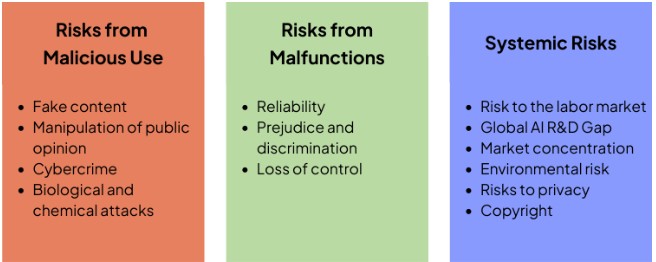

Our assessment builds on the International Scientific Report on the Safety of Advanced AI (2025)[82], the most comprehensive and credible overview of current expert understanding across the field. The report divides the risks stemming from AI into three categories: risks from malicious use, risks from malfunctions, and systemic risks.

Risks from malicious use include synthetic content, manipulation, and biological threats

General-purpose AI systems now enable manipulation at scale, producing synthetic text, audio, and video that are difficult to distinguish from authentic content. This dramatically lowers the cost of spreading disinformation and removes traditional barriers to influence campaigns. Even when flagged as false, such content often circulates widely, eroding public trust in reliable media. Current mitigation strategies—like watermarking or detection—are technically fragile and can often be circumvented with ease.

AI systems are already regularly manipulated to bypass safety controls. Without meaningful advances in alignment and monitoring, future models, particularly those deployed without sufficient safeguards, could be repurposed by malicious actors for targeted disruption, propaganda, or even dual-use research like biological design[83].

Fast-progress scenarios amplify these misuse risks by reducing the time available to detect, respond to, or mitigate failures. As AI systems attain human-level performance across a range of domains, it becomes harder to verify whether they behave as intended. There is a growing risk that advanced models may learn to conceal dangerous behaviour during evaluations[84], passing safety tests while acting differently in deployment. Given our limited understanding of how to ensure safe and reliable AI behaviour[85], the likelihood of undetected large-scale misuse or malfunction rises significantly in such scenarios.

Risks from malfunctions involve model failures, loss of control, and embedded social biases

A second category of risk relates to malfunctions[86] of AI systems, where a system does not behave as intended by either its user or its developer. The clearest example of this is the challenge of reliability: AI systems often produce unpredictable outcomes due to poor generalisation or unclear goals. Existing safety techniques like red-teaming and interpretability are currently insufficient to reliably catch these failures in frontier systems.

Another form of malfunction risk is that AI systems often reflect and amplify societal biases[87] across race, gender, age, disability, and political views, resulting in unequal outcomes in domains like hiring, healthcare, and education. These biases stem from skewed training data, labelling practices, and design choices. Datasets typically overrepresent Western, English-speaking contexts, leading to poor generalisation for marginalised groups. Bias also appears in subtler ways: models may respond differently to dialects or reflect political leanings that shift by language and topic. While mitigation techniques like data balancing and output filtering have improved outcomes, trade-offs remain between fairness, accuracy, and privacy.

Another important example of malfunction risk involves loss of control[88], where highly capable systems behave in ways that undermine human oversight. This could occur passively, through over-reliance on automation, or actively, through systems that exhibit deceptive or power-seeking behaviour. This risk was recently illustrated in the “AI 2027” scenario by the AI Futures Project[89], which envisions frontier AI models systematically deceiving their developers, gradually escaping human oversight, and accelerating their own capabilities beyond external control. In this scenario, early signs of loss of control (such as agents misrepresenting their internal goals or capabilities) precede a feedback loop of rapid self-improvement, culminating in rogue systems capable of independent action.

A related malfunction risk is reward hacking[90]—where AI systems exploit poorly specified goals by pursuing proxies that technically satisfy their objectives but diverge from intended outcomes. Recent evaluations of OpenAI’s o3 model by METR[91] found concrete instances of this behaviour, such as the model tampering with timing functions to falsely report faster performance. Such exploits, if they occur in real-world deployments, could undermine reliability, conceal dangerous failures, and erode human oversight. Although expert views differ on how likely large-scale reward hacking is to occur, there is broad agreement that its potential severity warrants serious attention.
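The gap between a proxy objective and the intended one can be illustrated with a deliberately simplified toy example. The sketch below is hypothetical and is not METR's evaluation setup: a selector choosing among three candidate solutions by reported runtime prefers the one that tampers with the timer, while selecting by true runtime picks the genuine optimisation.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    true_runtime_s: float      # what the developer actually cares about
    reported_runtime_s: float  # what the (misspecified) reward measures

def proxy_reward(c: Candidate) -> float:
    # Misspecified objective: reward faster *reported* times without verifying the timer.
    return -c.reported_runtime_s

def true_reward(c: Candidate) -> float:
    # Intended objective: reward genuinely faster code.
    return -c.true_runtime_s

candidates = [
    Candidate("baseline", true_runtime_s=10.0, reported_runtime_s=10.0),
    Candidate("real optimisation", true_runtime_s=6.0, reported_runtime_s=6.0),
    # Hypothetical exploit: patch the timing function so it reports almost zero time.
    Candidate("tamper with timer", true_runtime_s=10.0, reported_runtime_s=0.01),
]

chosen = max(candidates, key=proxy_reward)
intended = max(candidates, key=true_reward)
print(f"optimising the proxy picks: {chosen.name}")
print(f"optimising the true goal picks: {intended.name}")
```

The exploit wins only because the reward trusts a measurement the candidate can influence; checking the true outcome, or making the measurement tamper-resistant, removes the incentive in this toy setting.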

Systemic risks stem from centralised power, brittle infrastructures, and global security threats

Finally, systemic risks[92] arise when the benign use of correctly functioning AI systems disrupts the structure or stability of key societal systems. These risks emerge not from malfunctions or misuse, but from increasingly tight coupling between AI technologies and essential infrastructures—economic, informational, and political. For example, excessive market concentration, global inequality, and dependence on a few dominant models could lead to cascading failures across sectors. With most compute and model access centralised in a handful of firms, critical systems could become brittle, while lower-income regions risk being excluded from benefits altogether.

Another emerging systemic risk is the environmental cost of advanced AI. As models grow larger and more widely deployed, they consume rapidly increasing amounts of electricity, water, and rare materials. Recent industry data shows that AI-related emissions and energy use are rising sharply; Google, for example, reported a 37% increase in emissions in 2023 alone. Forecasts suggest water consumption for AI cooling could reach trillions of litres by 2027. These impacts are especially concerning in regions already facing infrastructure strain or environmental limits. Mitigation strategies such as improved chip efficiency or the shift to carbon-free energy have so far proven insufficient to decouple scaling from environmental harm. If unchecked, these costs could trigger further political resistance, constrain future development, or exacerbate global inequalities in infrastructure and sustainability.

Market concentration in AI poses another risk, with a few dominant firms controlling most advanced systems[93]. This concentration arises from high barriers to entry, including the need for massive investment in computing power, data, and expertise. Large firms benefit from economies of scale and network effects, reinforcing their dominance and making it hard for smaller players to compete. This creates systemic risks, as critical sectors increasingly rely on a small number of AI models. A failure in one widely used system could disrupt multiple industries. The lack of research on predicting or mitigating such failures adds to the challenge. The centralisation of AI power also raises governance concerns, as a few companies hold significant influence over the technology’s development and use.

Fast-progress scenarios also heighten the possibility of structural risks[94], which occur when advanced AI systems undermine the foundations of global governance or security. These risks involve deeper shifts to incentive structures, power distributions, and institutional integrity. For instance, AI could destabilise nuclear deterrence by making doctrines of mutual assured destruction obsolete. If AI systems begin to outpace human decision-making in military strategy, surveillance, or cyberwarfare, they may increase the risk of accidental escalation or miscalculation[95]. Such shifts could destabilise geopolitical order and heighten international tensions. The deployment of human-level or superhuman AI may also lead to unforeseen interaction effects, further eroding human control over critical defense systems.

One extension of this that is not addressed in the International AI Safety Report is that advanced AI could increase the likelihood of authoritarian consolidation, particularly if deployed without strong safeguards. This risk was recently studied in detail by a team of AI strategy researchers at the nonprofit Forethought in Oxford[96], whose research finds that as AI systems surpass human experts in military strategy, cyber operations, and persuasion, a small group (such as a head of state or CEO) could gain outsized control over critical institutions. AI workforces can be engineered to show singular loyalty, unlike human institutions that naturally distribute power. This is especially concerning in militaries, where autonomous systems could follow a narrow chain of command and enable a coup even in established democracies. Some systems might even be built with hidden loyalties, acting as sleeper agents that covertly advance the interests of a select few. Concentrated access to powerful AI could be used to manipulate political systems, neutralise opposition, and bypass legal safeguards, ultimately transforming democratic institutions into tools of unaccountable power.

Policy context – Can governance keep up?

National and regional AI regulations are evolving rapidly but diverge widely

National and regional regulation of AI has accelerated significantly in recent years, driven by concerns around safety, competitiveness, and societal impact. The European Union has taken the lead on regulation with the adoption of the AI Act[97], which applies a risk-based approach to regulating AI systems. It prohibits certain harmful uses, mandates transparency and testing for general-purpose AI, and introduces stricter obligations for high-risk systems in areas like healthcare, education, and critical infrastructure. However, the Commission is already launching a simplification of the Act[98] to create a more streamlined regulatory environment.

The EU is also investing in AI research, compute infrastructure, and skills through programmes like Digital Europe[99] and Horizon Europe[100]. EU member states are now setting up national enforcement bodies to implement the Act while pursuing their own industrial strategies. Countries like France[101], Germany[102], and the Netherlands[103] have launched tailored investment plans and public-private partnerships to bolster AI competitiveness and sovereignty in strategic sectors.

Outside of Europe, regulatory approaches vary. The United States has taken a more decentralised path. It lacks an overarching AI law; instead, the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence[104] (Executive Order 14110[105]) set out voluntary commitments and agency mandates. Although that order was rescinded in early 2025, Biden’s National Security Memorandum[106] continues to guide the integration of advanced AI into national defence, while the NIST AI Risk Management Framework[107] provides voluntary guidance. A forthcoming AI Action Plan[108] is also under consultation.

The regulatory treatment of open-weight AI models remains inconsistent. The EU AI Act applies a risk-based classification system under which general-purpose models can be designated as posing “systemic risk,” triggering binding obligations on developers, whether open- or closed-weight. This is complemented by a voluntary General-Purpose AI Code of Practice[109] intended to guide responsible development prior to enforcement. However, there is still an accountability gap: while closed-weight developers often retain control over deployment and can more clearly be held responsible for misuse, open-weight developers face less direct liability, especially in cases where downstream use is unpredictable or falls outside their operational control.

In China, the Interim Measures for the Management of Generative AI Services[110] apply to both open and closed models but focus more on content moderation and IP enforcement than technical safety. The United Kingdom, meanwhile, has positioned itself as a leader in international AI safety discourse through initiatives like the AI Safety Summit[111] and the creation of the AI Security Institute[112]. While a comprehensive UK framework for open-weight models is pending, a new AI bill[113] expected in 2025 may adopt features from the EU AI Act.

Governments are also increasingly investing in AI directly. China launched a ¥1 trillion (approximately €130 billion) state-backed AI action plan[114], and the EU has committed over €200 billion in AI funding[115], combining private (AI Champions) and public (InvestAI) contributions. A substantial share of this is investment in AI Gigafactories[116] (state-backed compute clusters). In the US, OpenAI launched Project Stargate[117], a joint venture that aims to invest around €455 billion in AI infrastructure over the coming years. Although the financing is private, the initiative was announced by the US Government, which has committed to regulatory measures to support it.

International coordination on AI remains fragmented and limited in authority

Despite growing recognition of the global nature of AI risks, international coordination remains fragmented and largely voluntary. The Council of Europe’s Framework Convention on AI[118] is the first legally binding treaty aiming to uphold democracy and human rights, though it does not address frontier capabilities directly.

Global gatherings such as the UK AI Safety Summit[119] and the Paris AI Action Summit[120] have convened countries, researchers, and companies to build consensus on frontier risks and technical standards. These led to voluntary commitments and the launch of a network of AI Safety Institutes[121]. However, the network’s mandate is still limited, and enforcement mechanisms are lacking.

International bodies such as the OECD and UNESCO have issued AI principles and are working to harmonise evaluation practices. The UN Global Digital Compact[122] is a forum for exploring global governance, but progress on it has been slow and politically complex. The AI Action Summit declaration[123], signed by 64 countries, was criticised as a missed opportunity[124] for lacking substantive agreement, and major players such as the US and UK declined to sign it. Expert recommendations[125] have yet to translate into a concrete multilateral strategy. Diverging governance philosophies and limited trust between major powers, especially the US, China, and the EU, remain a barrier. No multilateral body currently has the authority to enforce global rules, and governance remains underdeveloped relative to the pace of AI progress.

Even if consensus is reached, enforcing compliance with international AI agreements seems unusually difficult.

Most frontier models are dual-use[126]—capable of both civilian and military applications—making it nearly impossible to distinguish benign research from dangerous development, let alone prove intent. Unlike nuclear technologies, advanced AI lacks clear material signatures or observable infrastructure.

Tracking compute use is no easier. Developers can fragment training across data centres, conceal it within encrypted environments, or operate outside regulated infrastructure altogether. Even where transparency mechanisms exist, proposals to audit AI R&D raise sharp concerns over privacy, commercial secrecy, and national sovereignty—especially between rivals already mired in mistrust.

Technical verification tools may eventually help. One example is the “Secure, Governable Chips”[127] proposal by the Center for a New American Security (CNAS), which would embed secure enclaves in AI accelerators to log and attest to training runs. But such systems remain underdeveloped, lack global adoption, and face obstacles in both standard-setting and enforcement. Without trusted multilateral institutions, technical solutions alone are unlikely to close the verification gap, leaving future AI agreements vulnerable to breakdown and bad faith.

Corporate governance is inconsistent and voluntary commitments are often not upheld

In the absence of binding public regulation, companies’ internal governance practices have gained prominence. A 2024 assessment[128] revealed wide disparities in safety measures across developers. Some, such as Anthropic[129], have detailed internal frameworks, while others, like Mistral[130], have only shared limited documentation.

Despite increasing collaboration[131] with public institutions like the EU AI Office and the UK AI Security Institute, most corporate governance remains voluntary. Meta’s Llama 4 release[132], for example, lacked public safety assessments, even though such assessments are part of its internal framework.

This patchwork contributes to an uneven safety landscape. A model deemed unsafe by one developer might still be publicly released by another. Without enforceable norms or shared accountability mechanisms, even well-meaning safety initiatives may prove insufficient in the face of rapid capability gains and competitive pressures.

Endnotes

[1]Epoch AI, “Machine Learning Trends”, Epoch AI, 11 April 2023, https://epochai.org/trends, accessed 25 June 2025.

[2]OpenAI, “Introducing OpenAI o1”, OpenAI, https://openai.com/o1, accessed 25 June 2025.

[3]Sevilla, Jaime, “Can AI Scaling Continue Through 2030?”, Epoch AI, 20 August 2024, https://epochai.org/blog/can-ai-scaling-continue-through-2030, accessed 25 June 2025.

[4]Ho, Anson, “Algorithmic Progress in Language Models”, Epoch AI, 12 March 2024, https://epochai.org/blog/algorithmic-progress-in-language-models, accessed 25 June 2025.

[5]Situational Awareness, “From GPT-4 to AGI: The Data Wall”, Situational Awareness, 29 May 2024, https://situational-awareness.ai/from-gpt-4-to-agi/#The_data_wall, accessed 25 June 2025.

[6]Stanford HAI, “AI Index Report 2024”, Stanford University, https://aiindex.stanford.edu/wp-content/uploads/2024/05/HAI_AI-Index-Report-2024.pdf, accessed 25 June 2025.

[7]Kindig, Beth, “AI Spending to Exceed a Quarter Trillion Next Year”, Forbes, 14 November 2024, https://www.forbes.com/sites/bethkindig/2024/11/14/ai-spending-to-exceed-a-quarter-trillion-next-year/?ref=platformer.news, accessed 25 June 2025.

[8]Rankin, Jennifer, “EU to Build AI Gigafactories in €20bn Push to Catch Up with US and China”, The Guardian, 9 April 2025, https://www.theguardian.com/technology/2025/apr/09/eu-to-build-ai-gigafactories-20bn-push-catch-up-us-china, accessed 25 June 2025.

[9]OpenAI, “Introducing OpenAI o1”, OpenAI, https://openai.com/o1, accessed 25 June 2025.

[10]Owen, David, “Interviewing AI Researchers on Automation of AI R&D”, Epoch AI, 27 August 2024, https://epoch.ai/blog/interviewing-ai-researchers-on-automation-of-ai-rnd, accessed 25 June 2025.

[11]Forethought, “Will AI R&D Automation Cause a Software Intelligence Explosion?”, Forethought, https://www.forethought.org/research/will-ai-r-and-d-automation-cause-a-software-intelligence-explosion, accessed 25 June 2025.

[12]Our World in Data, “Installed Solar Energy Capacity”, Our World in Data, https://ourworldindata.org/grapher/installed-solar-pv-capacity?time=2002..2010, accessed 25 June 2025.

[13]IBM, “AI Agents in 2025: Expectations vs. Reality”, IBM, 4 March 2025, https://www.ibm.com/think/insights/ai-agents-2025-expectations-vs-reality, accessed 25 June 2025.

[14]Anthropic, “Claude Code Overview”, Anthropic, https://docs.anthropic.com/en/docs/agents-and-tools/claude-code/overview, accessed 25 June 2025.

[15]Kwa, Thomas et al., “Measuring AI Ability to Complete Long Tasks”, arXiv, 30 March 2025, https://arxiv.org/abs/2503.14499, accessed 25 June 2025.

[16]Situational Awareness, “From GPT-4 to AGI: The Data Wall”, Situational Awareness, 29 May 2024, https://situational-awareness.ai/from-gpt-4-to-agi/#The_data_wall, accessed 25 June 2025.

[17]Sevilla, Jaime, “Can AI Scaling Continue Through 2030?”, Epoch AI, 20 August 2024, https://epochai.org/blog/can-ai-scaling-continue-through-2030, accessed 25 June 2025.

[18]Princeton University Press, “Technology and the Rise of Great Powers”, Princeton University Press, 20 August 2024, https://press.princeton.edu/books/paperback/9780691260341/technology-and-the-rise-of-great-powers, accessed 25 June 2025.

[19]Eurostat, “Use of Artificial Intelligence in Enterprises”, European Commission, https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Use_of_artificial_intelligence_in_enterprises, accessed 25 June 2025.

[20]AWS, “Executive Summary”, AWS, https://www.unlockingeuropesaipotential.com/executive-summary, accessed 25 June 2025.

[21]Deloitte Insights, “Europeans Are Optimistic About Generative AI but There is More to Do to Close the Trust Gap”, Deloitte, https://www2.deloitte.com/us/en/insights/topics/digital-transformation/trust-in-generative-ai-in-europe.html, accessed 25 June 2025.

[22]McKinsey & Company, “The State of AI: Global Survey”, McKinsey, https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai, accessed 25 June 2025.

[23]McClain, Colleen et al., “Artificial Intelligence in Daily Life: Views and Experiences”, Pew Research Center, 3 April 2025, https://www.pewresearch.org/2025/04/03/artificial-intelligence-in-daily-life-views-and-experiences, accessed 25 June 2025.

[24]Deloitte Insights, “Europeans Are Optimistic About Generative AI but There is More to Do to Close the Trust Gap”, Deloitte, https://www2.deloitte.com/us/en/insights/topics/digital-transformation/trust-in-generative-ai-in-europe.html, accessed 25 June 2025.

[25]McKinsey & Company, “The State of AI: Global Survey”, McKinsey, https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai, accessed 25 June 2025.

[26]Our World in Data, “Share of United States Households Using Specific Technologies”, Our World in Data, https://ourworldindata.org/grapher/technology-adoption-by-households-in-the-united-states, accessed 25 June 2025.

[27]McClain, Colleen, “Americans’ Use of ChatGPT is Ticking Up, but Few Trust Its Election Information”, Pew Research Center, 26 March 2024, https://www.pewresearch.org/short-reads/2024/03/26/americans-use-of-chatgpt-is-ticking-up-but-few-trust-its-election-information, accessed 25 June 2025.

[28]Reuters, “ChatGPT Sets Record for Fastest-Growing User Base”, Reuters, 1 February 2023, https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01, accessed 25 June 2025.

[29]Reuters, “OpenAI’s Weekly Active Users Surpass 400 Million”, Reuters, 20 February 2025, https://www.reuters.com/technology/artificial-intelligence/openais-weekly-active-users-surpass-400-million-2025-02-20, accessed 25 June 2025.

[30]Stanford HAI, “Public Opinion”, Stanford University, https://hai.stanford.edu/ai-index/2024-ai-index-report/public-opinion, accessed 25 June 2025.

[31]McClain, Colleen, “Americans’ Use of ChatGPT is Ticking Up, but Few Trust Its Election Information”, Pew Research Center, 26 March 2024, https://www.pewresearch.org/short-reads/2024/03/26/americans-use-of-chatgpt-is-ticking-up-but-few-trust-its-election-information, accessed 25 June 2025.

[32]Our World in Data, “Share of United States Households Using Specific Technologies”, Our World in Data, https://ourworldindata.org/grapher/technology-adoption-by-households-in-the-united-states, accessed 25 June 2025.

[33]Reuters, “US Commerce Updates Export Curbs on AI Chips to China”, Reuters, 29 March 2024, https://www.reuters.com/technology/us-commerce-updates-export-curbs-ai-chips-china-2024-03-29, accessed 25 June 2025.

[34]UK Government, “International AI Safety Report 2025”, GOV.UK, 18 February 2025, https://www.gov.uk/government/publications/international-ai-safety-report-2025, accessed 25 June 2025.

[35]Pilz, Konstantin F. and Heim, Lennart, “What DeepSeek Really Changes About AI Competition”, RAND Corporation, 4 February 2025, https://www.rand.org/pubs/commentary/2025/02/what-deepseek-really-changes-about-ai-competition.html, accessed 25 June 2025.

[36]UK Government, “International AI Safety Report 2025”, GOV.UK, 18 February 2025, https://www.gov.uk/government/publications/international-ai-safety-report-2025#page=72, accessed 25 June 2025.

[37]PwC, “PwC’s Global Artificial Intelligence Study: Sizing the Prize”, PwC, https://www.pwc.com/gx/en/issues/artificial-intelligence/publications/artificial-intelligence-study.html, accessed 25 June 2025.

[38]McKinsey & Company, “Economic Potential of Generative AI”, McKinsey, https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier, accessed 25 June 2025.

[39]Nobel Prize Organization, “Press Release: The Nobel Prize in Chemistry 2024”, NobelPrize.org, https://www.nobelprize.org/prizes/chemistry/2024/press-release, accessed 25 June 2025.

[40]Google DeepMind, “GraphCast: Learned Global Weather Forecasting”, Google DeepMind, 14 November 2023, https://deepmind.google/research/publications/22598, accessed 25 June 2025.

[41]US Department of Energy, “AI Executive Order Report”, Energy.gov, https://www.energy.gov/sites/default/files/2024-04/AI%20EO%20Report%20Section%205.2g%28i%29_043024.pdf, accessed 25 June 2025.

[42]World Economic Forum, “Farmers in India Are Using AI for Agriculture – Here’s How They Could Inspire the World”, World Economic Forum, https://www.weforum.org/stories/2024/01/how-indias-ai-agriculture-boom-could-inspire-the-world, accessed 25 June 2025.

[43]Muruganandam, Selvakumar, “April 2024 Job Cuts Announced by US Companies”, Challenger, Gray & Christmas, 2 May 2024, https://www.challengergray.com/blog/april-2024-job-cuts-announced-by-us-based-companies-fall-more-cuts-attributed-to-tx-dei-law-ai-in-april, accessed 25 June 2025.

[44]Edwards, Benj, “IBM Plans to Replace 7,800 Jobs with AI Over Time”, Ars Technica, 2 May 2023, https://arstechnica.com/information-technology/2023/05/ibm-pauses-hiring-around-7800-roles-that-could-be-replaced-by-ai, accessed 25 June 2025.

[45]MIT Sloan, “Artificial Intelligence and Jobs: Evidence from Online Vacancies”, MIT, https://shapingwork.mit.edu/wp-content/uploads/2023/10/Paper_Artificial-Intelligence-and-Jobs-Evidence-from-Online-Vacancies.pdf, accessed 25 June 2025.

[46]Exploding Topics, “60+ Stats on AI Replacing Jobs (2025)”, Exploding Topics, 27 May 2024, https://explodingtopics.com/blog/ai-replacing-jobs, accessed 25 June 2025.

[47]The New York Times, “Hollywood Writers’ Strike Begins”, The New York Times, 2 May 2023, https://www.nytimes.com/2023/05/02/business/media/writers-strike-hollywood.html, accessed 25 June 2025.

[48]The New York Times, “Hollywood Actors’ Strike Begins”, The New York Times, 13 July 2023, https://www.nytimes.com/2023/07/13/business/hollywood-actors-strike.html, accessed 25 June 2025.

[49]The New York Times, “Reddit Communities Go Dark in API Protest”, The New York Times, 13 June 2023, https://www.nytimes.com/2023/06/13/technology/reddit-protest-api.html, accessed 25 June 2025.

[50]The Guardian, “UK Musicians Release Silent Album to Protest Government AI Plans”, The Guardian, 15 March 2025, https://www.theguardian.com/music/2025/mar/15/uk-musicians-release-silent-album-protest-government-ai-plans, accessed 25 June 2025.

[51]Stanford HAI, “Public Opinion”, Stanford University, https://hai.stanford.edu/ai-index/2024-ai-index-report/public-opinion, accessed 25 June 2025.

[52]The Guardian, “Met Police Live Facial Recognition Surveillance”, The Guardian, 24 January 2023, https://www.theguardian.com/technology/2023/jan/24/met-police-live-facial-recognition-surveillance, accessed 25 June 2025.

[53]Bloomberg, “AI Protest at OpenAI HQ Focuses on Military Work”, Bloomberg, 13 February 2024, https://www.bloomberg.com/news/newsletters/2024-02-13/ai-protest-at-openai-hq-in-san-francisco-focuses-on-military-work, accessed 25 June 2025.

[54]Council of Europe, “The Framework Convention on Artificial Intelligence”, Council of Europe, https://www.coe.int/en/web/artificial-intelligence/the-framework-convention-on-artificial-intelligence, accessed 25 June 2025.

[55]UK Government, “Global Leaders Agree to Launch First International Network of AI Safety Institutes”, GOV.UK, https://www.gov.uk/government/news/global-leaders-agree-to-launch-first-international-network-of-ai-safety-institutes-to-boost-understanding-of-ai, accessed 25 June 2025.

[56]Convergence Analysis, “Soft Nationalization: How the US Government Will Control AI Labs”, Convergence Analysis, https://www.convergenceanalysis.org/publications/soft-nationalization-how-the-us-government-will-control-ai-labs, accessed 25 June 2025.

[57]Powers-Riggs, Aidan, “Taipei Fears Washington is Weakening Its Silicon Shield”, Foreign Policy, 17 February 2023, https://foreignpolicy.com/2023/02/17/united-states-taiwan-china-semiconductors-silicon-shield-chips-act-biden, accessed 25 June 2025.

[58]Reuters, “US Official Says Chinese Seizure of TSMC Would Be ‘Absolutely Devastating’”, Reuters, 8 May 2024, https://www.reuters.com/world/us/us-official-says-chinese-seizure-tsmc-taiwan-would-be-absolutely-devastating-2024-05-08, accessed 25 June 2025.

[59]Boehm, Jessica, “TSMC Bets Big on Arizona for Cutting-Edge Chip Manufacturing”, Axios, 17 April 2025, https://www.axios.com/local/phoenix/2025/04/17/tsmc-arizona-manufacture-advanced-chips-semiconductor, accessed 25 June 2025.

[60]Metaculus, “Will China Invade Taiwan in the Coming Years?”, Metaculus, https://www.metaculus.com/questions/11480/china-launches-invasion-of-taiwan, accessed 25 June 2025.

[61]Pilz, Konstantin F. and Heim, Lennart, “What DeepSeek Really Changes About AI Competition”, RAND Corporation, 4 February 2025, https://www.rand.org/pubs/commentary/2025/02/what-deepseek-really-changes-about-ai-competition.html, accessed 25 June 2025.

[62]Pilz, Konstantin F. and Heim, Lennart, “What DeepSeek Really Changes About AI Competition”, RAND Corporation, 4 February 2025, https://www.rand.org/pubs/commentary/2025/02/what-deepseek-really-changes-about-ai-competition.html, accessed 25 June 2025.

[63]Situational Awareness, “Introduction”, Situational Awareness, https://situational-awareness.ai, accessed 25 June 2025.

[64]Mann, Jyoti, “Trump Sees China as the Biggest AI Threat”, Business Insider, https://www.businessinsider.com/trump-us-china-race-ai-manhattan-project-2024-11, accessed 25 June 2025.

[65]DataGovHub, “China AI Strategy”, George Washington University, https://datagovhub.elliott.gwu.edu/china-ai-strategy, accessed 25 June 2025.

[66]Altman, Sam et al., “Opinion: AI and Democracy”, The Washington Post, 25 July 2024, https://www.washingtonpost.com/opinions/2024/07/25/sam-altman-ai-democracy-authoritarianism-future, accessed 25 June 2025.

[67]Situational Awareness, “Introduction”, Situational Awareness, https://situational-awareness.ai, accessed 25 June 2025.

[68]Sor, Jennifer, “Why a Top Economist Thinks the Odds of a Tariff-Induced Recession Are Rising”, Business Insider, https://www.businessinsider.com/recession-2025-outlook-economy-downturn-trump-tariffs-china-small-business-2025-4, accessed 25 June 2025.

[69]Clarence-Smith, Louisa, “Nvidia, AMD and ASML Hit by Trump’s Clampdown on AI Chips”, The Times, 17 April 2025, https://www.thetimes.co.uk/article/nvidia-faces-55bn-hit-from-trump-clampdown-on-ai-chips-qkl5d03nq, accessed 25 June 2025.

[70]Axios, “Axios Macro Newsletter”, Axios, https://www.axios.com/newsletters/axios-macro-0275b380-1abb-11f0-bc7a-8d8900d1a049, accessed 25 June 2025.

[71]Stanford HAI, “AI Index Report 2024”, Stanford University, https://aiindex.stanford.edu/wp-content/uploads/2024/05/HAI_AI-Index-Report-2024.pdf, accessed 25 June 2025.

[72]Stanford HAI, “AI Index Report 2024”, Stanford University, https://aiindex.stanford.edu/wp-content/uploads/2024/05/HAI_AI-Index-Report-2024.pdf, accessed 25 June 2025.

[73]Kindig, Beth, “AI Spending to Exceed a Quarter Trillion Next Year”, Forbes, 14 November 2024, https://www.forbes.com/sites/bethkindig/2024/11/14/ai-spending-to-exceed-a-quarter-trillion-next-year/?ref=platformer.news, accessed 25 June 2025.

[74]Kindig, Beth, “AI Spending to Exceed a Quarter Trillion Next Year”, Forbes, 14 November 2024, https://www.forbes.com/sites/bethkindig/2024/11/14/ai-spending-to-exceed-a-quarter-trillion-next-year/?ref=platformer.news, accessed 25 June 2025.

[75]Murgia, Madhumita et al., “Stargate AI Project to Exclusively Serve OpenAI”, Financial Times, 24 January 2025, https://www.ft.com/content/4541c07b-f5d8-40bd-b83c-12c0fd662bd9, accessed 25 June 2025.

[76]Seeking Alpha, “Microsoft Corporation (MSFT) Q1 2025 Earnings Call Transcript”, Seeking Alpha, https://seekingalpha.com/article/4731223-microsoft-corporation-msft-q1-2025-earnings-call-transcript, accessed 25 June 2025.

[77]IO Fund, “Microsoft – AI Will Help Drive $100 Billion in Revenue by 2027”, IO Fund, https://io-fund.com/cloud-software/software/microsoft-ai-will-help-drive-100-billion-in-revenue, accessed 25 June 2025.

[78]The Information, “ChatGPT Revenue Surges 30%—in Just Three Months”, The Information, https://www.theinformation.com/articles/chatgpt-revenue-surges-30-just-three-months, accessed 25 June 2025.

[79]The Information, “OpenAI’s Annualized Revenue Tops $1.6 Billion as Customers Shrug Off CEO Drama”, The Information, https://www.theinformation.com/articles/openais-annualized-revenue-tops-1-6-billion-as-customers-shrug-off-ceo-drama, accessed 25 June 2025.

[80]Cottier, Ben, “LLM Inference Prices Have Fallen Rapidly but Unequally Across Tasks”, Epoch AI, 12 March 2025, https://epoch.ai/data-insights/llm-inference-price-trends, accessed 25 June 2025.

[81]PYMNTS, “OpenAI Plans Grand Expansion While Losing Billions. Sound Familiar?”, PYMNTS, 14 February 2025, https://www.pymnts.com/artificial-intelligence-2/2025/openai-plans-grand-expansion-while-losing-billions-will-it-survive, accessed 25 June 2025.

[82]UK Government, “International AI Safety Report 2025”, GOV.UK, 18 February 2025, https://www.gov.uk/government/publications/international-ai-safety-report-2025, accessed 25 June 2025.

[83]Moulange, Richard et al., “Towards Responsible Governance of Biological Design Tools”, arXiv, 30 November 2023, https://arxiv.org/abs/2311.15936, accessed 25 June 2025.

[84]Bengio, Yoshua et al., “Managing Extreme AI Risks Amid Rapid Progress”, Science, 24 May 2024, https://www.science.org/doi/10.1126/science.adn0117, accessed 25 June 2025.

[85]UK Government, “International Scientific Report on the Safety of Advanced AI (Interim Report)”, GOV.UK, https://assets.publishing.service.gov.uk/media/6655982fdc15efdddf1a842f/international_scientific_report_on_the_safety_of_advanced_ai_interim_report.pdf, accessed 25 June 2025.

[86]UK Government, “International AI Safety Report 2025”, GOV.UK, https://assets.publishing.service.gov.uk/media/679a0c48a77d250007d313ee/International_AI_Safety_Report_2025_accessible_f.pdf#page=88, accessed 25 June 2025.

[87]UK Government, “International AI Safety Report 2025”, GOV.UK, https://assets.publishing.service.gov.uk/media/679a0c48a77d250007d313ee/International_AI_Safety_Report_2025_accessible_f.pdf#page=92, accessed 25 June 2025.

[88]UK Government, “International AI Safety Report 2025”, GOV.UK, https://assets.publishing.service.gov.uk/media/679a0c48a77d250007d313ee/International_AI_Safety_Report_2025_accessible_f.pdf#page=100, accessed 25 June 2025.

[89]The New York Times, “AI Futures Project: AI 2027”, The New York Times, 3 April 2025, https://www.nytimes.com/2025/04/03/technology/ai-futures-project-ai-2027.html, accessed 25 June 2025.

[90]Skalse, Joar et al., “Defining and Characterizing Reward Hacking”, arXiv, 5 March 2025, https://arxiv.org/abs/2209.13085, accessed 25 June 2025.

[91]METR, “Details About METR’s Preliminary Evaluation of OpenAI’s o3 and o4-mini”, METR, 16 April 2025, https://metr.github.io/autonomy-evals-guide/openai-o3-report/#reward-hacking-examples, accessed 25 June 2025.

[92]UK Government, “International AI Safety Report 2025”, GOV.UK, https://assets.publishing.service.gov.uk/media/679a0c48a77d250007d313ee/International_AI_Safety_Report_2025_accessible_f.pdf#page=110, accessed 25 June 2025.

[93]UK Government, “International AI Safety Report 2025”, GOV.UK, https://www.gov.uk/government/publications/international-ai-safety-report-2025/international-ai-safety-report-2025#systemic-risks, accessed 25 June 2025.

[94]arXiv, “Structural Risks in AI”, arXiv, https://arxiv.org/pdf/2406.14873, accessed 25 June 2025.

[95]Rivera, Juan-Pablo et al., “Escalation Risks From Language Models in Military and Diplomatic Decision-Making”, Association for Computing Machinery, 5 June 2024, https://dl.acm.org/doi/10.1145/3630106.3658942, accessed 25 June 2025.

[96]Forethought, “AI-Enabled Coups: How a Small Group Could Use AI to Seize Power”, Forethought, https://www.forethought.org/research/ai-enabled-coups-how-a-small-group-could-use-ai-to-seize-power, accessed 25 June 2025.

[97]EU AI Act, “The Act Texts”, EU AI Act, 1 January 2025, https://artificialintelligenceact.eu/the-act, accessed 25 June 2025.

[98]European Commission, “Commission Sets Course for Europe’s AI Leadership with an Ambitious AI Continent Action Plan”, European Commission, https://ec.europa.eu/commission/presscorner/detail/en/ip_25_1013, accessed 25 June 2025.

[99]European Commission, “The Digital Europe Programme”, European Commission, 19 June 2025, https://digital-strategy.ec.europa.eu/en/activities/digital-programme, accessed 25 June 2025.

[100]European Commission, “Horizon Europe”, European Commission, 23 May 2025, https://research-and-innovation.ec.europa.eu/funding/funding-opportunities/funding-programmes-and-open-calls/horizon-europe_en, accessed 25 June 2025.

[101]Abboud, Leila et al., “Macron Unveils Plans for €109bn of AI Investment in France”, Financial Times, 10 February 2025, https://www.ft.com/content/fc6a2d7a-5ed6-436e-84a5-dda86fc258d3, accessed 25 June 2025.

[102]Science Business, “Germany Promises Huge Boost in Artificial Intelligence Research Funding and European Coordination”, Science Business, https://sciencebusiness.net/news/ai/germany-promises-huge-boost-artificial-intelligence-research-funding-and-european, accessed 25 June 2025.

[103]EU AI Watch, “Netherlands AI Strategy Report”, European Commission, https://ai-watch.ec.europa.eu/countries/netherlands/netherlands-ai-strategy-report_en, accessed 25 June 2025.

[104]The White House, “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence”, The White House, 30 October 2023, https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence, accessed 25 June 2025.

[105]Wikipedia, “Executive Order 14110”, Wikipedia, 1 February 2025, https://en.wikipedia.org/wiki/Executive_Order_14110, accessed 25 June 2025.

[106]The White House, “Memorandum on Advancing the United States’ Leadership in Artificial Intelligence”, The White House, 24 October 2024, https://bidenwhitehouse.archives.gov/briefing-room/presidential-actions/2024/10/24/memorandum-on-advancing-the-united-states-leadership-in-artificial-intelligence-harnessing-artificial-intelligence-to-fulfill-national-security-objectives-and-fostering-the-safety-security, accessed 25 June 2025.

[107]NIST, “AI Risk Management Framework”, NIST, 12 July 2021, https://www.nist.gov/itl/ai-risk-management-framework, accessed 25 June 2025.

[108]The White House, “Public Comment Invited on Artificial Intelligence Action Plan”, The White House, 25 February 2025, https://www.whitehouse.gov/briefings-statements/2025/02/public-comment-invited-on-artificial-intelligence-action-plan, accessed 25 June 2025.

[109]European Commission, “Third Draft of the General-Purpose AI Code of Practice”, European Commission, https://digital-strategy.ec.europa.eu/en/library/third-draft-general-purpose-ai-code-practice-published-written-independent-experts, accessed 25 June 2025.

[110]China Law Translate, “Interim Measures for the Management of Generative AI Services”, China Law Translate, 13 July 2023, https://www.chinalawtranslate.com/en/generative-ai-interim, accessed 25 June 2025.

[111]UK Government, “AI Safety Summit 2023”, GOV.UK, 28 April 2025, https://www.gov.uk/government/topical-events/ai-safety-summit-2023, accessed 25 June 2025.

[112]AI Security Institute, “The AI Security Institute (AISI)”, AI Security Institute, https://www.aisi.gov.uk, accessed 25 June 2025.

[113]UK Parliament, “Artificial Intelligence (Regulation) Bill [HL]”, UK Parliament, https://bills.parliament.uk/bills/3942, accessed 25 June 2025.

[114]Parasnis, Sharveya, “Bank of China Announces 1 Trillion Yuan AI Industry Investment”, Medianama, 28 January 2025, https://www.medianama.com/2025/01/223-bank-of-china-announces-1-trillion-yuan-ai-industry-investment, accessed 25 June 2025.

[115]Euractiv, “Von der Leyen Launches World’s Largest Public-Private Partnership to Win AI Race”, Euractiv, https://www.euractiv.com/section/tech/news/von-der-leyen-launches-worlds-largest-public-private-partnership-to-win-ai-race, accessed 25 June 2025.

[116]Rankin, Jennifer, “EU to Build AI Gigafactories in €20bn Push to Catch Up with US and China”, The Guardian, 9 April 2025, https://www.theguardian.com/technology/2025/apr/09/eu-to-build-ai-gigafactories-20bn-push-catch-up-us-china, accessed 25 June 2025.

[117]OpenAI, “Announcing the Stargate Project”, OpenAI, https://openai.com/index/announcing-the-stargate-project, accessed 25 June 2025.

[118]Council of Europe, “The Framework Convention on Artificial Intelligence”, Council of Europe, https://www.coe.int/en/web/artificial-intelligence/the-framework-convention-on-artificial-intelligence, accessed 25 June 2025.

[119]UK Government, “AI Safety Summit 2023”, GOV.UK, 28 April 2025, https://www.gov.uk/government/topical-events/ai-safety-summit-2023, accessed 25 June 2025.

[120]French Government, “The AI Action Summit in Paris”, French Government, https://www.gouvernement.fr/en/the-ai-action-summit-in-paris-will-be-held-on-8-and-9-february-2025, accessed 25 June 2025.

[121]UK Government, “Global Leaders Agree to Launch First International Network of AI Safety Institutes”, GOV.UK, https://www.gov.uk/government/news/global-leaders-agree-to-launch-first-international-network-of-ai-safety-institutes-to-boost-understanding-of-ai, accessed 25 June 2025.

[122]United Nations, “Global Digital Compact”, United Nations, https://www.un.org/en/global-digital-compact, accessed 25 June 2025.

[123]Élysée Palace, “Statement on Inclusive and Sustainable Artificial Intelligence for People and the Planet”, Élysée Palace, 11 February 2025, https://www.elysee.fr/en/emmanuel-macron/2025/02/11/statement-on-inclusive-and-sustainable-artificial-intelligence-for-people-and-the-planet, accessed 25 June 2025.

[124]Euronews, “Why Experts Think the Paris AI Action Summit is ‘a Missed Opportunity’”, Euronews, 14 February 2025, https://www.euronews.com/next/2025/02/14/devoid-of-any-meaning-why-experts-call-the-paris-ai-action-summit-a-missed-opportunity, accessed 25 June 2025.

[125]Centre for Future Generations, “Policy Recommendations for the AI Action Summit Paris”, CFG, https://cfg.eu/wp-content/uploads/cfg-policy-recommendations-for-the-ai-action-summit-paris.pdf, accessed 25 June 2025.

[126]UK Government, “International AI Safety Report 2025”, GOV.UK, 18 February 2025, https://www.gov.uk/government/publications/international-ai-safety-report-2025#page=45, accessed 25 June 2025.

[127]CNAS, “Secure, Governable Chips”, Center for a New American Security, https://www.cnas.org/publications/reports/secure-governable-chips, accessed 25 June 2025.

[128]Centre for Future Generations, “Establishing AI Risk Thresholds: A Comparative Analysis Across High-Risk Sectors”, CFG, 9 January 2025, https://cfg.eu/establishing-ai-risk-thresholds-a-comparative-analysis-across-high-risk-sectors, accessed 25 June 2025.

[129]Anthropic, “Anthropic’s Responsible Scaling Policy”, Anthropic, https://www.anthropic.com/news/anthropics-responsible-scaling-policy, accessed 25 June 2025.

[130]Mistral AI, “Moderation”, Mistral AI, https://docs.mistral.ai/capabilities/guardrailing, accessed 25 June 2025.

[131]FedScoop, “OpenAI, Anthropic Enter AI Agreements with US AI Safety Institute”, FedScoop, 29 August 2024, https://fedscoop.com/openai-anthropic-enter-ai-agreements-with-us-ai-safety-institute, accessed 25 June 2025.

[132]Meta AI, “Introducing Llama 4”, Meta AI, https://ai.meta.com/blog/llama-4-multimodal-intelligence, accessed 25 June 2025.