Strengthening AI trustworthiness – Strategic innovation for European security part 3

This report is part of our project Strategic innovation for European security. It identifies ten emerging technologies the EU should invest in to safeguard its security and uphold European values in the face of rapid technological change, geopolitical instability, and economic uncertainty.

Of the ten technologies we identify, three fall within advanced AI.

Introduction

As a general-purpose technology, AI has the potential to influence every aspect of society[1]. While the productivity gains from AI may substantially accelerate scientific discovery and economic efficiency, the technology also raises pressing security challenges that demand urgent attention. In light of this, the future of Europe’s economic strength and national security hinges on harnessing the power of AI while guarding against misuse and unintended consequences.

Europe is currently lagging behind its geopolitical peers in computing infrastructure, capital investment, and talent acquisition for AI[2], but has taken steps to catch up with the InvestAI initiative, mobilising €200 billion including €20 billion for AI gigafactories in Europe[3]. With that, the EU has laid the foundation for developing safe, democratically aligned, and competitive AI systems in Europe – following calls for action from CFG and other organizations, further detailed in CERN for AI: The EU’s seat at the table[4] and Building CERN for AI: An institutional blueprint[5].

The EU has also made laudable progress towards regulating responsible AI development and integration through the EU AI Act – becoming the first major regulatory body in the world to regulate AI comprehensively.

But capital investment and regulatory leadership are not enough to address the security challenges posed by the proliferation of AI. In order to ensure compliance with AI security standards and mitigate the broader risks of AI, the EU must innovate security mechanisms at both the software and hardware level.

Ensuring AI systems are trustworthy and secure is critical to widespread adoption, as well as a safety imperative. Nuclear power buildout suffered prolonged stagnation due to loss of public trust and increased regulatory barriers after incidents such as Chernobyl and Three Mile Island, only now showing signs of revival after decades in the wilderness[6]. Similarly, a high-profile AI-related disaster could trigger severe regulatory backlash and impede Europe’s AI growth trajectory. To fully realize the benefits of AI, Europe must proactively build robust security measures that prevent catastrophic failures, which could hinder adoption as well as put lives and economic prosperity at risk.

We have identified three technological initiatives for the EU to address these concerns.

First, investing in the development of Hardware-Enabled Governance Mechanisms (HEMs) could be crucial for safe AI, enabling global compute governance. HEMs can enable collaboration and trust by allowing for mutual verification of compliance with regulatory frameworks, complementing global AI governance frameworks. This would enable international treaties, which in all likelihood will be a necessary step for geopolitical rivals to coordinate on the responsible use of AI.

Secondly, investing in and facilitating the creation of an AI evaluations industry is a necessary component of the adoption of AI across Europe. The adoption of AI in the broader economy remains an issue of reliability, safety, and trustworthiness. By building the technology to ensure AI systems can be confidently deployed at scale, the EU can unlock large-scale adoption and productivity gains across the economy. Building on the EU AI Act, this would further strengthen Europe’s position as a global player in AI governance and ensure the alignment of AI systems with the EU’s democratic values.

Thirdly, investing in the development of AI-powered cybersecurity systems will boost the resilience of the EU’s critical digital infrastructure and address the rising cybersecurity threats introduced with new AI systems. In addition to empowering small-scale aggressors, the advent of AI coincides with the most adversarial geopolitical climate since the end of the Cold War, where hybrid warfare, including extensive cyber operations, has become the new normal. As AI is poised to accelerate the offensive capabilities of malicious state and non-state actors to conduct cyberattacks, harnessing the power of AI to enhance our cybersecurity measures is a strategic and economically crucial imperative.

By investing in on-chip security hardware, trusted evaluation systems, and AI-powered cybersecurity, the EU can establish a leadership position within trustworthy AI.

Hardware-Enabled Governance Mechanisms

HEMs are mechanisms for governance embedded in AI semiconductor chips to allow for regulatory controls, enforcement, or compliance. The mechanisms can be implemented in both software and hardware on the AI chips. HEMs could be critical to allow for the global governance of AI chips, and complementary to already existing governance approaches. They could enable international transparency on chip usage, make it difficult to assemble chips into the large clusters required for the most powerful AI systems, and potentially ensure that any unauthorized tampering attempt results in an unusable chip. By allowing for compute oversight or hardware limitations, the protection measures provide an enforcement and transparency mechanism to help ensure the safe use of AI, at home and abroad.

Introduction

The development of powerful AI systems under the conditions of geopolitical competition exacerbates safety risks and increases the likelihood of unintended consequences. Competing states have incentives to prioritize speed over safety in AI development, opening the door for ever-more powerful systems that could be used in destructive ways. As access to powerful AI systems proliferates among state and non-state actors, the lack of effective mechanisms to monitor and prevent accidents and misuse creates severe vulnerabilities[7].

If AI continues to be an arena of geopolitical competition, the world will need geopolitical adversaries to collaborate on its responsible use and development. One step in this direction has been the Council of Europe’s Framework Convention for Artificial Intelligence and Human Rights, Democracy and the Rule of Law, the first-ever legally binding international treaty regulating the use of AI by governments[8]. However, treaties will mean nothing if states have no way of ensuring each other’s compliance.

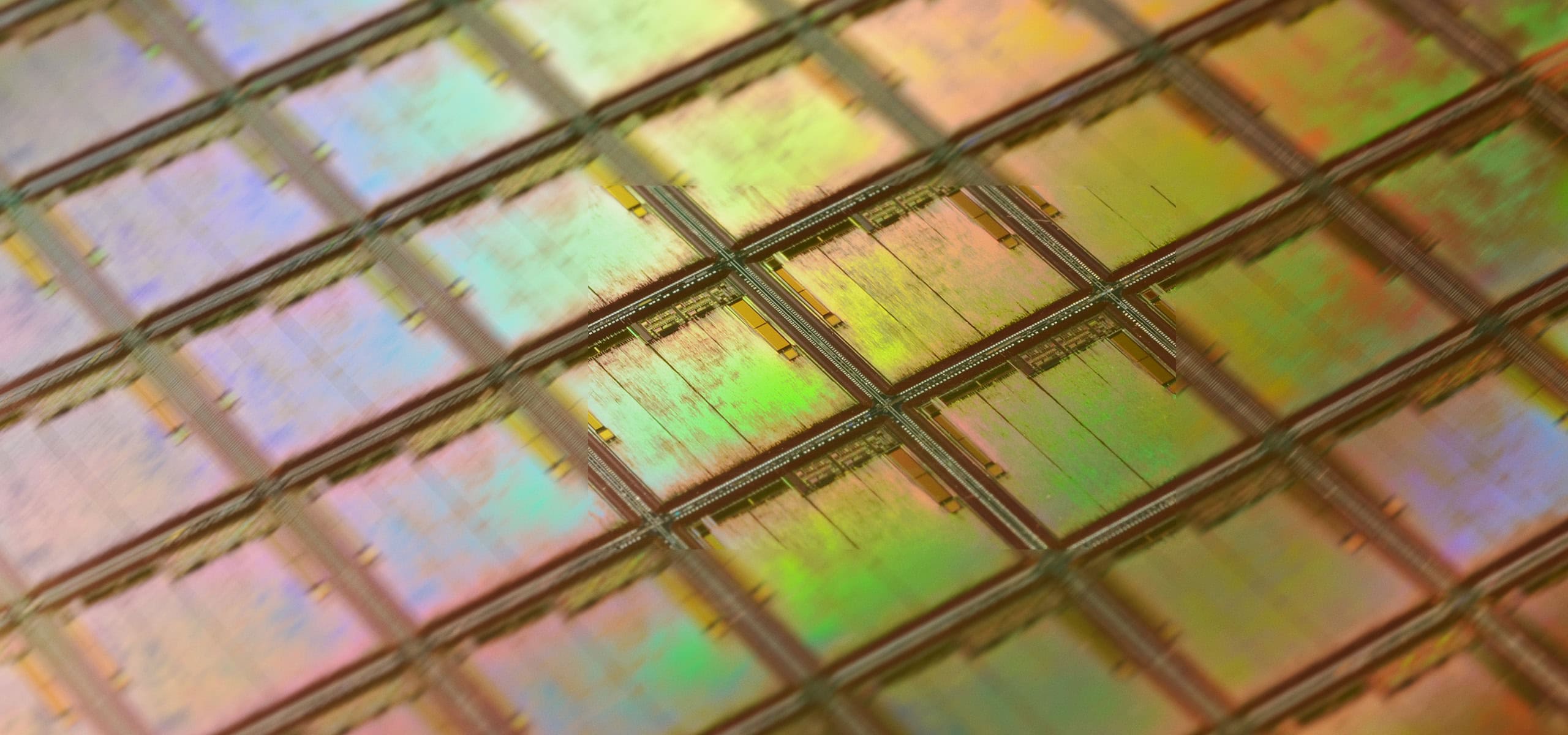

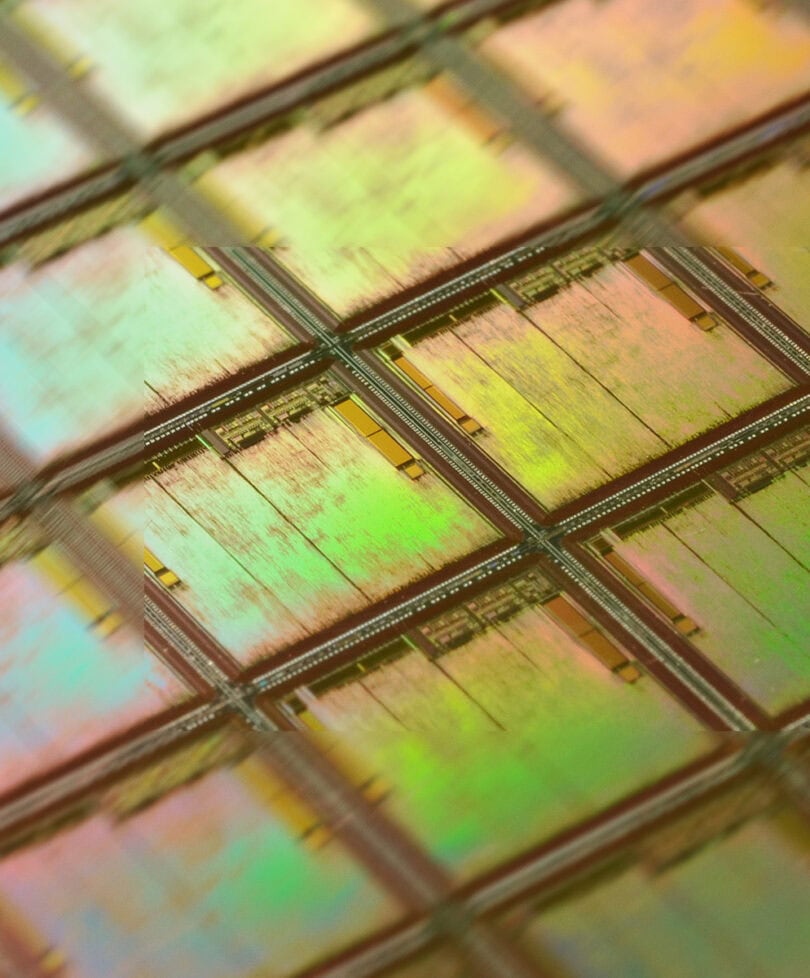

Compute governance is currently the most likely way to enable mutual accountability and transparency on AI[9]. An enabling factor of AI development has been the large-scale utilisation of high-throughput semiconductor chips (often referred to as “AI chips”), enabling massively scaled computing power. Compute will likely continue to serve as the “currency of AI” as it continues to be driven by scaling laws in training and inference[10]. Given the reliance of AI systems on AI chips, developing hardware mechanisms that enable insight and governance of chips can in turn enable international governance of AI.

HEMs could provide the necessary mutual insight between parties to build trust and enable international cooperation on the responsible use of AI, analogous to how the International Atomic Energy Agency has been able to build trust between states with neutral third-party inspections of their nuclear activities. While HEMs are an unproven and emerging technology that has both technological and regulatory uncertainties, they are among the best positioned to foster international cooperation and governance of AI[11].

By investing in the design and development of HEMs, the EU can accelerate the development of a critical technology and gain a presence in a rapidly growing global AI chip industry.

Security benefits

AI algorithms ultimately run on physical AI chips. This enables the possibility of hardware-based governance, as large numbers of chips with high computation throughput are required to run the most advanced and transformative AI systems.

Policy attempts to regulate the proliferation of AI chips already exist, such as the US AI Diffusion Framework[12], and new ideas are emerging for compute governance systems that could allow for verification or enforcement through HEMs[13]. Proposed approaches include FlexHEG[14], but no complete solution exists yet.

It’s important to remember that the world has successfully managed high-risk technologies under adversarial geopolitical conditions before. Within nuclear science, international cooperation facilitates peaceful applications of the technology. Crucially, nuclear diplomacy relies not just on treaties but on the International Atomic Energy Agency (IAEA) and their independent inspections.

Like nuclear science, AI is associated with high risks and will require international cooperation in order to be adopted safely. Just as the IAEA has repeatedly been able to build confidence between states, information-sharing and transparency mechanisms governing compute usage can be the technological bedrock of these treaties for the safe development and use of AI.

HEMs could enable the mitigation of risks in AI development and utilization, allowing authorities to verify each other’s compute usage and detect heavy training or inference activities both within and outside their borders. This would make it significantly harder for rogue actors or geopolitical adversaries to develop advanced AI systems outside of monitored channels, as the monitored AI chips themselves would make unauthorized usage evident. By building these security measures directly into the chips rather than relying on policy frameworks alone, we can create robust technical barriers that make sure AI development happens safely and remains visible to relevant authorities. Additionally, increased transparency can build trust among allies, facilitating the use of AI chips among otherwise friendly partners. It could, for example, allow for exports to “Controlled-Access countries” under the US AI Diffusion Framework, 17 of which are in the EU.

While promising, HEMs should not be seen as a silver bullet for global AI governance, as there are several valid concerns about this approach[15]. First, in order to ensure the mechanism addresses the problem at hand, it should be part of a comprehensive threat model. Second, it might not be possible to make the HEMs secure against circumvention. Third, reasonable privacy and security considerations might make the chips unusable for a risk-averse actor. While HEM technologies aim to address these concerns, there is no guarantee that they will be resolved in time for adoption given the long lead time for chip design and manufacturing.

If these challenges are addressed, HEMs could enable global compute governance – where chips themselves become the focal point of policy verification and enforcement.

Economic benefits

While uncertain, HEMs could become mandatory for participation in the rapidly growing global AI chip market. The GPU industry is estimated to generate €90 billion in yearly revenue in 2025 and is projected to grow to €1.3 trillion by 2034[16]. For instance, assuming that the HEMs market – as a supporting industry – can capture a single-digit percentage (1-6%) of this broader market, it would be worth between €13 and €75 billion globally by 2034. Given the high geographical concentration in other segments of the supply chain, a market leader in HEM technology could take a globally controlling position in a potentially vital aspect of the AI chip market. While highly dependent on regulatory and technological uncertainties, this presents a significant opportunity for the EU.
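
The market-sizing arithmetic above can be reproduced in a few lines. The following is a minimal sketch, assuming the report's €1.3 trillion projection and its 1-6% capture range; the function name `hem_market_range` is hypothetical and used only for illustration. A straight 6% share works out to about €78 billion, so the €75 billion upper bound quoted above presumably reflects rounding in the underlying figures.

```python
def hem_market_range(total_market_bn: float, low_share: float, high_share: float) -> tuple[float, float]:
    """Return the (low, high) market size in billions of euros for a share range."""
    return total_market_bn * low_share, total_market_bn * high_share


# Figures taken from the paragraph above: a EUR 1.3 trillion GPU market by 2034,
# with HEMs assumed to capture between 1% and 6% of it.
low, high = hem_market_range(1300.0, 0.01, 0.06)
print(f"HEM market in 2034: EUR {low:.0f}bn to EUR {high:.0f}bn")
```

Changing the share arguments shows how sensitive the estimate is to the assumed capture rate, which is the main source of uncertainty in this figure.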

In addition to the technological uncertainties, there are significant regulatory uncertainties arising from the global cooperation required to drive demand for HEMs. Firstly, demand for HEMs must be driven by supranational regulatory requirements on compute and chip governance[17]. Without calls for strict transparency protocols through HEMs in a significant share of the market, the technology will have negligible impact on chip availability and be an ineffective tool for governance.

Secondly, the technology itself is not yet proven and could ultimately prove ineffective due to either (a) vulnerability to circumvention or (b) prohibitive implementation costs[18].

Europe’s position

The EU has the opportunity to lead in building this nascent technology in the semiconductor supply chain by being an early mover, but success requires international cooperation with US partners on global regulation, standards, tech development, and implementation in relevant products. Developing the technology requires targeted action and collaboration – most major AI chip designers are headquartered in the US and UK – and the EU currently lacks concentration of technical talent in this domain despite housing several companies in non-AI chip design.

While Europe is lacking capabilities in AI chip design, it already has a critical position in the chip supply chain through ASML. Expanding this position into HEMs is a strategic opportunity, but addressing the existing gap in chip-design expertise is challenging. It will require targeted action, especially given the difference in expertise required across the AI chip supply chain and the current US dominance through Nvidia and AMD.

Europe is in a unique position to build the first secure datacenters, with the safety infrastructure required to resist sabotage from external or internal actors and to provide transparency to allies on the usage of AI chips. This could drive initial domestic demand for HEMs and provide a catalyst for the industry. Furthermore, it could serve as a first demonstration of the safety and transparency features of HEMs in modern AI datacenters.

The proposed CERN for AI’s hardware research track could provide an ideal institutional foundation for developing this technology while attracting and retaining the necessary technical talent, giving Europe a seat at the table in shaping international regulations on compute governance.

AI evaluations industry

The AI evaluations industry encompasses the emerging sector of third-party actors which test, audit, and certify AI models for safety, reliability, and compliance. As advanced AI systems grow more complex, so do the technical solutions required to evaluate them. Building robust evaluation systems requires significant R&D into new solutions able to evaluate the safety of frontier AI capabilities, a perpetually moving target. As AI models are further integrated into society, we must build the technology to evaluate AI models against unintended behaviours, misuse, and noncompliance with regulations and ethical norms. By independently verifying the safety and reliability of new AI models, the AI evaluations industry will facilitate the integration of AI into more industries and systems, and build trust amongst users, developers, and governments.

Introduction

As AI permeates high-stakes industries like law, healthcare, and finance, it becomes crucial to independently evaluate these systems to verify that they are safe, reliable, and compliant with our values. With increased capabilities and rates of adoption come increased risks to reliability and safety. The AI evaluations industry will play a critical role in ensuring that our increasing cross-sectoral reliance on AI systems is safe and compatible with legal frameworks and ethical norms.

Independent evaluation of AI systems is critical to unlock the productivity gains of AI through adoption across society. An industry of trusted third-party evaluators will help ensure private companies have the confidence to deploy AI in their operations, and enable the associated productivity gains. Additionally, it will support regulators’ mission to avoid abuse and catastrophes.

The EU’s regulatory expertise puts Europe in a unique position to lead in this emerging field. By building both the regulatory and technical foundations now, Europe can gain a leading position within the emerging AI evaluations industry, ensure that AI systems behave as intended and in line with European values, help European companies scale in a global market with substantial growth, and enable companies and governments to reap the economic benefits of increased adoption. Building the technical tools to support this regulation is a significant challenge, but by setting the regulatory framework, we can incentivise European companies to build these missing solutions.

This industry would encompass private companies conducting trustworthiness assessments, bias detection, compliance verification, and certification. This industry would be foundational to unlocking the productivity benefits of AI – analogously to how financial audits crucially enable capital markets – and support the European AI Office’s implementation of the AI Act[19].

With maturing AI models and evaluators, evaluations require increasing levels of automation. Already, AI evaluations are being automated both in labs and academia, and the primary way to scale trustworthy evaluations is by continued automation using other generative AI models[20]. In short, evaluating AI models is a technology challenge in itself. A proper regulatory framework must be put in place to align incentives, but the evaluation procedures themselves are deeply technical and are the subject of state-of-the-art AI research irrespective of any further policy interventions[21].

Security benefits

The integration of AI systems in society carries with it substantial risks of both misuse and unintended consequences[22]. As increasingly powerful systems are deployed across critical sectors – including finance, healthcare, insurance, and the legal system – misspecified or misused systems can shut down critical infrastructure and lead to loss of life as well as livelihoods. From rallies in financial markets to AI-based healthcare evaluations, there are already examples of AI behaving in ways that cannot be explained or that run contrary to the intentions of its users[23]. A sound and trustworthy AI evaluations industry is therefore a crucial element in ensuring the safety and efficacy of the AI transition. Without thorough evaluations of these solutions, commercial use-cases of the technology might be significantly limited.

In addition to ensuring the needed trustworthiness of specialized systems, an evaluations industry allows outside auditors to verify the alignment of foundation models with legal frameworks and democratic norms. The increase in AI capabilities and use drives the risk of misaligned AI, giving audit and security assurance ever-increasing importance.

Currently, evaluations of the trustworthiness of AI systems are predominantly done by the developers themselves, which creates a clear conflict of interest. In the few cases of voluntary access given to third party evaluators, the auditor is incentivised to be less stringent in order to maintain access and preserve their relationship with the developer. In order for AI evaluations to reliably serve their purpose, there is a need for an evaluations industry that is independent from the developers of AI systems and backed by clear and enforceable regulations.

Economic benefits

The global AI Assurance Technology market – which includes a broad range of technologies in AI safety and governance – is projected to reach €245bn in 2030, with significant uncertainty ranging from €81bn to €810bn in low and high scenarios[24]. In the base scenario, if the AI evaluations industry – covering trustworthiness assessments, bias detection, compliance verification, and certification – makes up around a third of this market[25], its expected yearly revenue would be around €80 billion, corresponding to around 3-6% of the projected global AI market of €1.5tn–€3tn by 2030[26]. Though this figure is large, the eventual size of the nascent AI evaluations industry is uncertain, as its growth will depend heavily on the degree to which private companies and governments require AI evaluations and certification standards.
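
The base-scenario arithmetic above can be checked with a short calculation. This is a minimal sketch, assuming the figures quoted in the text (a €245bn assurance market, a one-third share for evaluations, and a €1.5tn–€3tn global AI market); the variable names are illustrative only. It yields roughly €82bn, or about 2.7% to 5.4% of the global AI market, which is close to the rounded figures given above.

```python
# Figures quoted in the paragraph above (billions of euros, 2030).
assurance_market_bn = 245.0      # base-scenario AI assurance technology market
evaluations_share = 1 / 3        # assumed share attributable to AI evaluations
ai_market_low_bn = 1500.0        # lower projection for the global AI market
ai_market_high_bn = 3000.0       # upper projection for the global AI market

evaluations_bn = assurance_market_bn * evaluations_share

# The share is largest against the smaller AI market and smallest against the larger one.
share_of_smaller_market = evaluations_bn / ai_market_low_bn
share_of_larger_market = evaluations_bn / ai_market_high_bn

print(f"Evaluations revenue: EUR {evaluations_bn:.1f}bn")
print(f"Share of global AI market: {share_of_larger_market:.1%} to {share_of_smaller_market:.1%}")
```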

Critically, the AI evaluations industry will enable safe and trustworthy adoption of AI in the EU, which itself will yield substantial returns. European organisations currently lag behind their American counterparts in AI adoption rate by 45-70%[27]. By accelerating the development of an AI evaluations industry, the EU can expedite the adoption of trustworthy systems across its industries, improving economic productivity within the Union as a whole.

As trust is critical for the adoption of AI systems[28], the EU will only be able to reap the potentially game-changing and societally transformative benefits[29] of AI automation if it can establish the trustworthiness of these systems through independent validation.

Europe’s position

Building the AI evaluations industry can be driven by both regulatory foundations and development of technical solutions. In terms of regulatory foundations, the European AI strategy has as its central aim to make the EU a leader in human-centric and trustworthy AI[30]. With initiatives like the AI Act and the AI Office, the EU has made a credible regulatory push to become a global leader in the AI evaluations industry. Building on this foundation, the EU can include specific and transparent certification standards as part of its regulatory framework for AI, as companies providing AI safety evaluations will only benefit once certifications become recognised across Europe.

In addition to the regulatory groundwork, Europe must drive the technical solutions to perform state-of-the-art AI evaluations. It is crucial to ensure that the right talent has access to build the right solutions. As such, the EU can draw on its talent pools but needs to make sure that both the commercial incentives and talent attractors are in place to drive the development of the industry. As with the building of CERN for AI[31], Europe can build the talent attractors with the right funding models and targeted investment.

Drawing on experience from the regulation and auditing of other high-impact industries like finance and banking, the EU can build and accelerate the growth of an AI evaluations industry that will facilitate safe AI adoption throughout its economy. Just as auditing and banking have become global markets with established international companies, the EU has the potential to set the benchmark in AI evaluations and grow the next global service providers in the space. By building predictable regulation and the right financial incentives to attract the right talent, the EU can lay the foundation for becoming a leader in the global evaluation and certification industry of AI.

AI-driven cybersecurity

AI-driven cybersecurity encompasses a new wave of security solutions leveraging powerful AI systems to defend against cyber threats. Due to the rise of AI in cyber-offensive operations, traditional cybersecurity approaches struggle to keep pace with cyber threats. AI-driven security solutions offer powerful new capabilities for threat prevention, detection, and response across all sectors. These solutions include using generative AI to find and address security issues, perform penetration testing of systems, and detect anomalies, as well as defensive AI agents that respond to attacks in real time. The integration of AI into cybersecurity enables more proactive defence mechanisms that can anticipate and adapt to emerging threats rather than merely reacting to known attack patterns, and might form the backbone of future cyber defence.

Introduction

The cybersecurity landscape is shifting rapidly as AI drives fast growth in cyber risks while lowering the cost of attacks. Simultaneously, AI creates extraordinary opportunities for enhancing cybersecurity across institutions through automated detection and response capabilities. To keep up with increasing cyber-offensive capabilities, cyber-defensive actors must address the security issues presented by this new reality.

Today, increasing geopolitical tensions combine with extensive reliance on digital infrastructure and the rapid adoption of emerging technologies to heighten cybersecurity risks. To meet this reality, the EU must leverage the best available technologies in order to manage cyber risks as effectively as possible.

Cyberattacks are already increasingly prevalent. Current AI systems are enabling large-scale phishing, deepfake scams, automated disinformation campaigns, and highly targeted social engineering attacks. Malicious actors have targeted US Senators by impersonating Ukrainian officials[32], and such tools are being used in voice scams, both for unauthorized access to systems and for simpler “grandparent scams” where scammers impersonate family members, often grandchildren, in dire situations asking for money[33]. Critical infrastructure, such as electrical grids, has already been the successful target of cyberattacks[34]. Increasingly, AI enables cybercriminals to attack critical systems at scale[35]. As AI agents increase their independence and ability to perform cyberattacks, we must expect attacks to become cheaper and more accessible to all adversaries, opening the door for increased utilization[36].

By proactively investing in AI-based cybersecurity, the EU can better ensure the security of critical infrastructure across financial systems, healthcare, energy, and transportation that otherwise remains vulnerable to increasingly sophisticated attacks. As emphasised by the WEF’s Global Cybersecurity Outlook 2025, policy makers must leverage the power of AI in order to deprive attackers of their technological advantage[37]. Investments made today in AI-powered cybersecurity solutions will pay off substantially in averted losses, as well as aid the positioning of EU companies within a profitable and growing industry.

Security benefits

Cyberattacks have continued to grow in complexity, number, and intensity in recent years. Global losses from cybersecurity breaches amounted to €8.2 trillion in 2024, a figure projected to reach €14 trillion by 2030 at a 9% CAGR[38].
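
The projection above follows from standard compound growth, where the future value equals the present value multiplied by (1 + rate) raised to the number of years. The following is a minimal sketch, assuming the report's 2024 baseline and 9% rate; the helper names `project` and `implied_cagr` are hypothetical. Compounding €8.2 trillion over six years gives about €13.8 trillion, consistent with the rounded €14 trillion figure.

```python
def project(present_value: float, rate: float, years: int) -> float:
    """Compound a present value forward at a constant annual growth rate."""
    return present_value * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Return the constant annual growth rate that turns start into end."""
    return (end / start) ** (1 / years) - 1


years = 2030 - 2024
losses_2030_tn = project(8.2, 0.09, years)       # trillions of euros
rate_to_14tn = implied_cagr(8.2, 14.0, years)    # rate needed to reach exactly 14

print(f"Projected 2030 losses at 9% CAGR: EUR {losses_2030_tn:.1f}tn")
print(f"CAGR implied by EUR 8.2tn -> EUR 14tn: {rate_to_14tn:.1%}")
```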

AI-enabled cyber operations will allow offensive and defensive actors to scale operations to unprecedented levels, and traditional cybersecurity measures struggle to keep up with the changing nature of AI-enabled threats[39]. Whereas manual analysis, rule-based systems, and signature-based detection are effective against many known threats, they are often inadequate against novel sophisticated attacks. With the massive increase in potential malware samples from AI, forensics teams have a larger surface to analyse and lower odds of finding the novel sophisticated attacks per sample. In order to defend against cyberattacks, security operations must make use of AI systems at scale to shore up vulnerabilities and to detect and defend against attacks. Defensive AI involves “red-teaming” with offensive AI systems to identify vulnerabilities, and we must expect defensive actors to develop the ability to run offensive operations against their own systems to a sufficient degree, without using that ability against others. As such, it is crucial for the EU to develop this capability domestically.

Further, the increasing availability of sophisticated AI models is now lowering the barriers to entry for cyberattacks, reducing costs and required expertise. By streamlining the process of identifying vulnerabilities and deploying malware, AI-powered cyberattacks further allow for a speed and scale of attacks which would otherwise be impossible. The shortened time from reconnaissance to exploit and the increased scale of attacks raise the importance of being able to detect the first signs of an attack. While enhancing traditional security practices is critical, the novel nature and scale of these attacks necessitate a corresponding AI-powered enhancement of defensive capabilities.

AI-powered cybersecurity can leverage machine learning and AI agents to analyze vast amounts of data in real time and act on it at a scale that was previously prohibitively expensive. This approach allows for proactive defence mechanisms, as AI systems can predict potential vulnerabilities based on historical data and adapt to emerging threat landscapes quickly[40]. AI-driven solutions offer enhanced scalability, efficiently processing large datasets without a proportional increase in resources, thereby reducing operational costs and reliance on manual intervention[41]. This cost efficiency can increase cyber capabilities across the board and make them accessible to a larger number of actors who currently cannot afford to hire human cybersecurity professionals. This matters because 86% of Europe’s small enterprises (10-49 employees) lack even a single in-house IT specialist[42], and 55% of security professionals warn that staffing shortages already place their organisations at significant risk[43].

Lastly, AI can help shore up the software running on critical infrastructure by allowing formal verification algorithms to be implemented to ensure its security. Already, the International Energy Agency has urged Ukraine to “bolster physical and cyber security” of its energy infrastructure as the number one priority[44]. Verification as well as highly automated bug and vulnerability testing would make it harder for destructive adversaries to disrupt the normal functioning of, for example, the electricity grid, water systems, and financial systems.

Economic benefits

The global cybersecurity market was worth €218bn in 2024 and is projected to reach €440bn yearly in 2030[45], with AI-powered cybersecurity comprising approximately 20% at €90bn yearly[46]. By building out Europe’s cybersecurity solutions, the EU can take a significant market share in this rapidly growing industry.

Additionally, AI-enhanced security solutions could significantly reduce economic losses from cyberattacks, which currently cost trillions of euros annually[47]. This will only increase as the world continues its digitalization[48]. AI-based cybersecurity is crucial in addressing these issues, and can lower operational costs by automating significant parts of the labor-intensive monitoring and response required in modern businesses[49]. Robust AI cybersecurity builds trust and can accelerate safe digital transformation across industries, especially in sensitive domains such as financial services and healthcare.

Europe’s position

The cybersecurity market is fragmented, but as the industry matures it could consolidate around AI-driven capabilities. US companies are currently leading in investment in cybersecurity, but Europe has the opportunity to challenge this position as AI presents a force for renewal[50]. The EU can capture a significant share of this market by drawing on its highly educated population and ensuring sufficient investment and consolidation opportunities.

The EU AI Act supports significant market demand for effective, compliant cybersecurity solutions. This gives European companies a potential advantage in their home market, and strengthens the reputation of European cybersecurity companies abroad. The local regulatory framework can serve as a foundation for developing AI and cybersecurity capabilities that meet and exceed global standards, which will position European firms for international expansion.

Europe further possesses strong educational infrastructure for developing cybersecurity talent, but currently lacks the concentration of specialized expertise found in leading technology hubs. As explored in general terms in Building CERN for AI[51], targeted investment in AI and cybersecurity could enable Europe to build the talent pipeline necessary to compete globally while securing European and global resilience.

We’ll publish part 4 of this series soon. In the meantime, read part 2 on climate security.

Endnotes

[1] Janků, S., Reddel, F., Reddel, M., Graabak, J. Beyond the AI Hype: A critical assessment of AI’s transformative potential. Centre for Future Generations, 2025. https://cfg.eu/beyond-the-ai-hype/

[2] European Commission: EISMEA. ‘The EU invests in artificial intelligence only 4% of what the U.S. spends on it’. Newsroom, 2025. https://ec.europa.eu/newsroom/eismea/items/864247/en (accessed 3 April 2025)

[3] European Commission. ‘EU launches InvestAI initiative to mobilise €200 billion of investment in artificial intelligence’, 2025. https://ec.europa.eu/commission/presscorner/detail/en/ip_25_467 (accessed 3 April 2025)

[4] Juijn, D., Pataki, B., Petropoulos, A. & Reddel, M. CERN for AI: The EU’s seat at the table. Centre for Future Generations, 2024. https://cfg.eu/cern-for-ai-eu-report/

[5] Petropoulos, A., Pataki, B., Juijn, D., Janku, D. & Reddel, M. Building CERN for AI: An institutional blueprint. Centre for Future Generations, 2025. https://cfg.eu/building-cern-for-ai/

[6] Nuclear electricity production increased at a 26% CAGR in 1965-1979 before the Three Mile Island incident (1979) and Chernobyl incident (1986), subsiding to a rate of 1% CAGR in 1986-2023: Our World in Data. ‘Nuclear Energy Generation’, 2024. https://ourworldindata.org/grapher/nuclear-energy-generation (accessed 3 April 2025)

[7] Janku, D., Reddel, M., Yampolskiy, R. & Hausenloy, J. We have no science of safe AI. Centre for Future Generations, 2024. https://cfg.eu/we-have-no-science-of-safe-ai/

[8] Janku, D., Reddel, M., Yampolskiy, R. & Hausenloy, J. We have no science of safe AI. Centre for Future Generations, 2024. https://cfg.eu/we-have-no-science-of-safe-ai/

[9] Sastry, G., Heim, L. et al. ‘Computing Power and the Governance of Artificial Intelligence’, arXiv:2402.08797, 2024. https://doi.org/10.48550/arXiv.2402.08797 ; Aarne, O., Fist, T. & Withers, C. ‘Secure, Governable Chips’, Center for a New American Security, 2024. https://www.cnas.org/publications/reports/secure-governable-chips (accessed 3 April 2025)

[10] Fridman, L. ‘Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI’, Lex Fridman Podcast, 2023, Episode 367. https://lexfridman.com/sam-altman-2-transcript/ (accessed 3 April 2025)

[11] Kulp, G. et al., Hardware-Enabled Governance Mechanisms: Developing Technical Solutions to Exempt Items Otherwise Classified Under Export Control Classification Numbers 3A090 and 4A090, RAND, 2024. https://www.rand.org/pubs/working_papers/WRA3056-1.html

[12] Heim, L. Understanding the Artificial Intelligence Diffusion Framework: Can Export Controls Create a U.S.-Led Global Artificial Intelligence Ecosystem?, RAND, 2025. https://www.rand.org/pubs/perspectives/PEA3776-1.html

[13] Heim, L., Anderljung, M., Bluemke, E. & Trager, R. ‘Computing Power and the Governance of AI’, Centre for the Governance of AI, 2024. https://www.governance.ai/analysis/computing-power-and-the-governance-of-ai ; Kulp, G. et al., Hardware-Enabled Governance Mechanisms: Developing Technical Solutions to Exempt Items Otherwise Classified Under Export Control Classification Numbers 3A090 and 4A090, RAND, 2024. https://www.rand.org/pubs/working_papers/WRA3056-1.html

[14] Petrie, J., Aarne, O., Ammann, N. & Dalrymple, D. ‘Interim Report: Mechanisms for Flexible Hardware-Enabled Guarantees’, Institute for AI Policy and Strategy, 2024. https://yoshuabengio.org/wp-content/uploads/2024/09/FlexHEG-Interim-Report_2024.pdf

[15] Heim, L. ‘Limitations for AI Hardware-Enabled Mechanisms’, 2024. https://blog.heim.xyz/considerations-and-limitations-for-ai-hardware-enabled-mechanisms/ (accessed 3 April 2025)

[16] Precedence Research. ‘Graphic Processing Unit (GPU) Market Size | Share and Trends 2025 to 2034’, 2025. https://www.precedenceresearch.com/graphic-processing-unit-market (accessed 3 April 2025)

[17] Kulp, G. et al., Hardware-Enabled Governance Mechanisms: Developing Technical Solutions to Exempt Items Otherwise Classified Under Export Control Classification Numbers 3A090 and 4A090, RAND, 2024. https://www.rand.org/pubs/working_papers/WRA3056-1.html

[18] Kulp, G. et al., Hardware-Enabled Governance Mechanisms: Developing Technical Solutions to Exempt Items Otherwise Classified Under Export Control Classification Numbers 3A090 and 4A090, RAND, 2024. https://www.rand.org/pubs/working_papers/WRA3056-1.html

[19] European Commission. ‘European AI Office’, 2025. http://digital-strategy.ec.europa.eu/en/policies/ai-office (accessed 3 April 2025)

[20] See published research by Apollo Research: Apollo Research, 2025. https://www.apolloresearch.ai/research (accessed 3 April 2025)

[21] Anthropic. ‘Anthropic’s Responsible Scaling Policy’, 2023. https://www.anthropic.com/news/anthropics-responsible-scaling-policy (accessed 3 April 2025)

[22] Bengio, Y. et al. International AI Safety Report: The International Scientific Report on the Safety of Advanced AI. AI Action Summit, 2025. https://assets.publishing.service.gov.uk/media/679a0c48a77d250007d313ee/International_AI_Safety_Report_2025_accessible_f.pdf

[23] AIID. ‘AI Incident Database’, 2025. https://incidentdatabase.ai/

[24] AIAT. Risk & Reward: 2024 AI Assurance Technology Market Report, 2024. https://www.aiat.report/report/about

[25] AIAT. Risk & Reward: 2024 AI Assurance Technology Market Report, 2024, Appendix E. https://www.aiat.report/report/about

[26] Grand View Research. ‘Global Artificial Intelligence Market Size & Outlook’, 2025. https://www.grandviewresearch.com/horizon/outlook/artificial-intelligence-market-size/global (accessed 3 April 2025) ; Epoch AI. ‘GATE – AI and Automation Scenario Explorer’, 2025. http://epoch.ai/gate#econ-growth (accessed 3 April 2025)

[27] Sukharevsky, A. et al. ‘Time to place our bets: Europe’s AI opportunity’, McKinsey Global Institute, 2024. https://www.mckinsey.com/capabilities/quantumblack/our-insights/time-to-place-our-bets-europes-ai-opportunity (accessed 3 April 2025)

[28] Deloitte. ‘It’s time for Trustworthy AI’, 2025. https://www.deloitte.com/no/no/issues/trustworthy-ai.html (accessed 3 April 2025) ; AIID. ‘AI Incident Database’, 2025. https://incidentdatabase.ai/ (accessed 3 April 2025)

[29] Janků, S., Reddel, F., Reddel, M., Graabak, J. Beyond the AI Hype: A critical assessment of AI’s transformative potential. Centre for Future Generations, 2025. https://cfg.eu/beyond-the-ai-hype/

[30] European Commission. ‘European approach to artificial intelligence’, 2025. https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence (accessed 3 April 2025)

[31] Petropoulos, A., Pataki, B., Juijn, D., Janku, D. & Reddel, M. Building CERN for AI: An institutional blueprint. Centre for Future Generations, 2025. https://cfg.eu/building-cern-for-ai/

[32] Merica, D. ‘Sophistication of AI-backed operation targeting senator points to future of deepfake schemes’. AP News, 2024. https://apnews.com/article/deepfake-cardin-ai-artificial-intelligence-879a6c2ca816c71d9af52a101dedb7ff (accessed 3 April 2025)

[33] Riley, D. ‘CrowdStrike report finds surge in malware-free cyberattacks and AI-driven threats in 2024’. Silicon Angle, 2025. https://siliconangle.com/2025/02/27/crowdstrike-report-finds-surge-malware-free-cyber-attacks-ai-driven-threats-2024/ (accessed 3 April 2025) ; Consumer and Governmental Affairs. ‘“Grandparent” Scams Get More Sophisticated’, Federal Communications Commission, 2025. https://www.fcc.gov/consumers/scam-alert/grandparent-scams-get-more-sophisticated (accessed 3 April 2025)

[34] US Department of Justice. ‘Six Russian GRU Officers Charged in Connection with Worldwide Deployment of Destructive Malware and Other Disruptive Actions in Cyberspace’, 2020. https://www.justice.gov/archives/opa/pr/six-russian-gru-officers-charged-connection-worldwide-deployment-destructive-malware-and (accessed 3 April 2025)

[35] Williams, R. ‘Cyberattacks by AI agents are coming’, MIT Technology Review, 2025. https://www.technologyreview.com/2025/04/04/1114228/cyberattacks-by-ai-agents-are-coming/ (accessed 10 April 2025)

[36] Sabbagh, D. ‘Russia plotting to use AI to enhance cyber-attacks against UK, minister will warn’, The Guardian, 2024. https://www.theguardian.com/world/2024/nov/25/russia-plotting-to-use-ai-to-enhance-cyber-attacks-against-uk-minister-will-warn (accessed 3 April 2025)

[37] Joshi, A., Moschetta, G. & Winslow, E. Global Cybersecurity Outlook 2025. World Economic Forum: Accenture, 2025. https://reports.weforum.org/docs/WEF_Global_Cybersecurity_Outlook_2025.pdf

[38] Statista. ‘Estimated cost of cybercrime worldwide 2018-2029’, 2025. https://www.statista.com/forecasts/1280009/cost-cybercrime-worldwide (accessed 3 April 2025) ; Santini, G. ‘A European outlook from the ISACA 2024 State of Cybersecurity Report’, EU Digital Skills and Jobs Platform, 2025. https://digital-skills-jobs.europa.eu/en/latest/news/european-outlook-isaca-2024-state-cybersecurity-report (accessed 3 April 2025)

[39] Darktrace. ‘Preparing for AI-enabled cyberattacks’, MIT Technology Review Insights, 2021. https://www.technologyreview.com/2021/04/08/1021696/preparing-for-ai-enabled-cyberattacks/ (accessed 3 April 2025)

[40] Prince U. N. et al. ‘AI-Powered Data-Driven Techniques: Boosting Threat Identification and Reaction’, Nanotechnology Perceptions 20, 2024, pp.332-353. https://doi.org/10.13140/RG.2.2.22975.52644

[41] IBM. ‘Cost of a data breach Report 2024’, 2024. https://www.ibm.com/reports/data-breach

[42] Eurostat. ‘Digital economy and society statistics – enterprises’, 2025. https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Digital_economy_and_society_statistics_-_enterprises (accessed 3 April 2025)

[43] The International Information System Security Certification Consortium, ‘2024 ISC2 Cybersecurity Workforce Study’, 2024. https://digital-skills-jobs.europa.eu/system/files/2024-12/ISC2_Workfoce-Study-Findings-EU.pdf (accessed 3 April 2025)

[44] International Energy Agency. ‘Ukraine’s Energy Security and the Coming Winter’, 2024, p. 8, https://iea.blob.core.windows.net/assets/cec49dc2-7d04-442f-92aa-54c18e6f51d6/UkrainesEnergySecurityandtheComingWinter.pdf

[45] Grand View Research. ‘Cyber Security Market size & Trends’, 2024. https://www.grandviewresearch.com/industry-analysis/cyber-security-market (accessed 3 April 2025) ; Spherical Insights, ‘Global Cybersecurity Market Insights Forecast to 2030’, 2023. https://www.sphericalinsights.com/reports/cybersecurity-market (accessed 3 April 2025)

[46] Allied Market Research. ‘AI in Cybersecurity Market Statistics, 2032’, 2023. https://www.alliedmarketresearch.com/ai-in-cybersecurity-market-A185408 (accessed 3 April 2025)

[47] Statista. ‘Estimated cost of cybercrime worldwide 2018-2029’, 2025.

[48] Statista. ‘Estimated cost of cybercrime worldwide 2018-2029’, 2025 ; Santini, G. ‘A European outlook from the ISACA 2024 State of Cybersecurity Report’, 2025.

[49] Vazquez, I. ‘Economic impact of automation and artificial intelligence’, WatchGuard, 2024. https://www.watchguard.com/wgrd-news/blog/economic-impact-automation-and-artificial-intelligence (accessed 3 April 2025)

[50] Statista. ‘Cybersecurity – Worldwide’, 2025. https://www.statista.com/outlook/tmo/cybersecurity/worldwide (accessed 3 April 2025)

[51] Petropoulos, A., Pataki, B., Juijn, D., Janku, D. & Reddel, M. Building CERN for AI: An institutional blueprint. Centre for Future Generations, 2025. https://cfg.eu/building-cern-for-ai/