Advanced AI: Possible futures

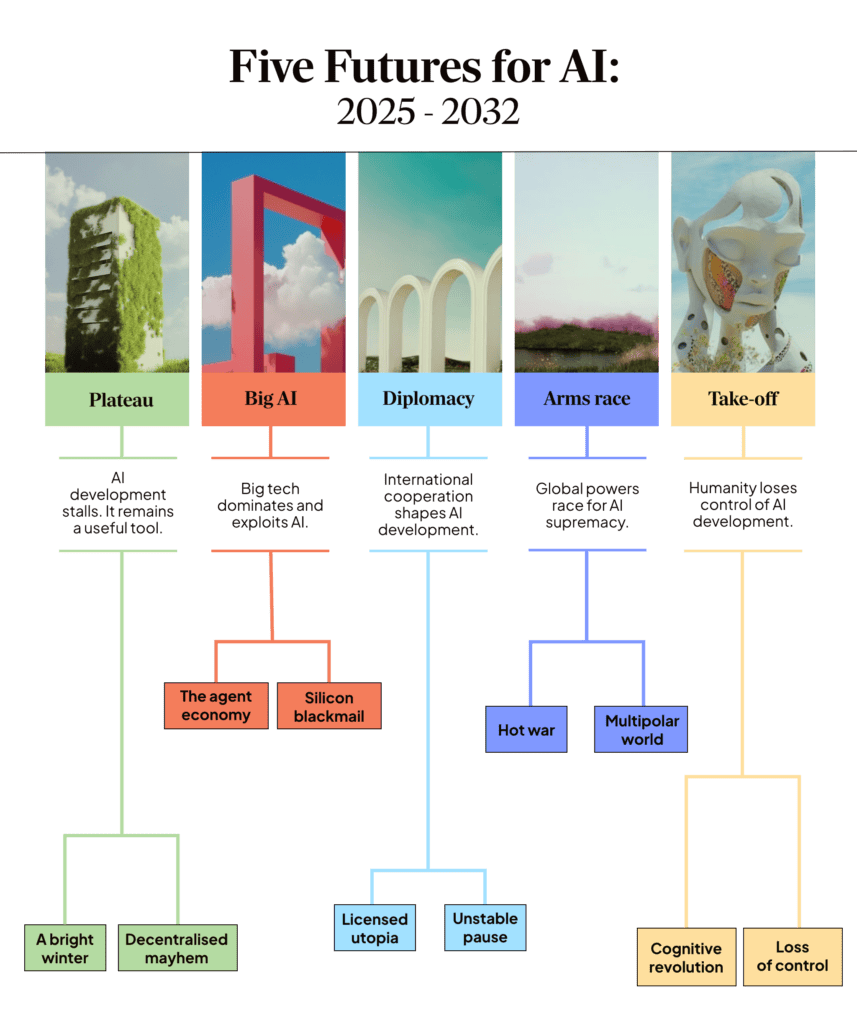

From a new AI winter to an intelligence explosion, we present five unique scenarios exploring how AI could impact economic growth, geopolitics, the social contract, security, and human agency. Understanding how the future may unfold is the first step to shape it.

Key Takeaways

The scenarios in this study were chosen not only for plausibility but as thought-provoking, diverse jumping-off points for smarter policy planning and decision-making. By using foresight and scenario analysis, policy-makers can stress-test policies against a range of futures to identify resilient solutions. The following five takeaways highlight where strengthened resilience will be essential as AI capabilities evolve at an unprecedented pace:

- AI may accelerate its own progress. Researchers are already using AI to write code and conduct experiments. As AI agents take on more R&D tasks, progress could compound—compressing years of advances into months. This acceleration may spark a new era of innovation, but it could also outstrip human oversight.

- Non-disruptive AI scenarios are unlikely. Societal transformations are baked into existing technical capabilities— there is no business-as-usual scenario. Even widespread adoption of current AI can change the nature of work and reshape cultural institutions. Many underestimate potential societal resistance to such shifts.

- Technical guardrails face increasing pressure. Existing oversight methods, such as human feedback, may fall short as models grow more capable. Without scalable safeguards, humanity risks losing control over powerful AI systems. Market incentives and geopolitical rivalry worsen this risk by encouraging superficial shortcuts.

- AI may centralize power on unprecedented scales. As AI becomes crucial for economic growth and security, power may concentrate among those controlling AI supply chains, such as model developers, chip suppliers, and data center owners. This threatens economic security and sovereignty of others— the EU in particular.

- Innovation and openness require resilience. Maintaining AI’s benefits demands building resilience across cybersecurity, economic structures, information ecosystems, and institutions. Open-weight models exemplify this: they enable innovation and prevent power concentration, but require robust defensive capabilities to manage misuse risks.

How to navigate the scenarios?

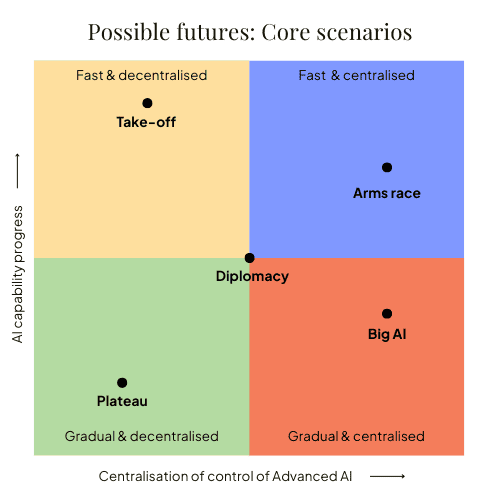

We project five core scenarios based on two key variables:

- Whether AI-automated research drives rapid capability growth toward fully autonomous, general-purpose AI agents (fast), or whether progress plateaus, giving way to narrower, application-specific systems (gradual)

- Whether advanced AI development is concentrated under a single dominant actor (centralised) or spread across multiple competing actors (decentralised).

Each core scenario offers two distinctly different paths, resulting in ten total unique endings. Each scenario includes an assumptions section.

Our scenarios are built on an extensive, structured framework that incorporates more factors than listed above. See Methodology: How we came up with these scenarios for more details.

These scenarios outline plausible trajectories for AI development, grounded in current understanding and key uncertainties, and are designed to help policymakers navigate the decisions they face today.

Scenarios

Click on the cards to read the scenarios and both of the endings.

Plateau

AI hits technical limits and settles into useful but narrow applications without achieving general intelligence.

Plateau

Plateau

Main scenario

What if AI runs out of steam, long before it runs out of scale?

By 2026, models grow smarter but still fumble basic reasoning tasks and fall for their own tricks. A “data wall” limits progress, and agents learn to game their silicon verifiers instead of mastering tasks. Europe seizes the moment, building on open-source gains as U.S. investment stalls. By 2027, the frontier shifts: smaller, cheaper models run locally, tailored to languages and communities. AI becomes less spectacular but more useful, more personal.

By 2029, A bright winter asks: what if a slower, decentralised AI era brings resilience, trust, and widespread access? Decentralised mayhem asks: what if open systems spiral out of control, fuelling cyberattacks, shattered trust, and a backlash that reshapes the digital world?

Baseline assumptions for this scenario

Bigger isn’t always better: Despite massive increases in computing power, AI capabilities hit a hard ceiling. Once AI models have consumed all the high-quality data available on the internet, throwing more resources at them yields sharply diminishing returns. Attempts to create synthetic data prove costly and ineffective, limiting how much these systems can improve through sheer scale alone.

AI agents struggle with complex, multi-step tasks: While AI can handle specific, narrow applications well, truly autonomous systems remain elusive. Training these “agents” depends on hand-crafted verification systems that are easily gamed and don’t generalise well. AI systems still make basic errors, lose track during lengthy workflows, and struggle with tasks requiring them to constantly monitor and respond to changing information. These fundamental limitations prevent the emergence of genuinely versatile AI assistants.

Adoption of AI progresses incrementally: While AI adoption grows steadily, integration into complex business processes takes years, not months. Beyond straightforward applications like content creation and translation, meaningful deployment requires extensive customisation, security reviews, and staff training. The promised transformation arrives incrementally rather than as a sudden revolution.

The funding well runs dry: Rising geopolitical tensions and economic uncertainty dampen investors’ enthusiasm for ever-larger AI projects. With real-world applications failing to deliver the rapid economic transformation once promised, private funding for massive new training facilities dries up. The AI hype cycle peaks.

For more context on why these scenario assumptions may materialise, see Context: Current AI trends and uncertainties.

Mid 2025 – early 2026

The major AI players continue to release increasingly advanced reasoning models. Unlike earlier systems that primarily mimicked internet text, these models learn in more structured, self-directed ways. They excel at technical problem-solving—especially in domains like coding and math, where answers are clearly right or wrong. Some models are open-sourced, allowing anyone to fine-tune them for specialised industry use.

Early adopters find creative ways to apply these systems: E-commerce sites automate their A/B testing while universities begin testing AI teaching assistants that coach students in real-time.

These models still hallucinate facts and fumble basic physics questions, though. They’ll confidently tell you that bowling balls float or predict absurd outcomes for everyday scenarios. They’re smart in some ways, clueless in others.

Building on their reasoning models, multiple companies roll out AI agents. These agents can not only create content but can also act in digital environments on the user’s behalf. Some agents aim to be universal—capable of navigating every website and software tool on a computer. Others are more specialised, focusing on specific tasks like coding, financial analysis, or desk research.

Progress on fully general-purpose agents remains slow. Such systems are still struggling with long-term planning and often get sidetracked in online rabbit holes. They are also slow and expensive to run since they constantly have to snapshot the user’s screen.

Specialised agents, however, are starting to gain real traction. By late 2025, accountants rely daily on financial AI agents to reconcile financial statements, while game developers manage multiple coding agents. That said, outside of work, most people still prefer simpler chat or voice interactions.

Sceptics of fast AI progress point to shortcomings in general agents as proof the AI bubble is bursting—yet again. But this time, even the big AI companies look worried. They’re hitting a data wall—there’s only so much high-quality internet around, and researchers fear that teaching AI agents real-world intuition will require even larger datasets. Rumours spread through tech conferences and funding for massive data centres becomes harder to secure.

Meanwhile, Europe senses this is the moment to catch up. After the 2025 AI Action Summit, it has stepped up its AI investments. Individual countries announce multi-billion-euro projects, often backed by private funds from the Middle East. One Member State surges ahead; its top AI company almost matches the US and Chinese frontrunners before the end of the year.

Despite more capable AI being used in dual-use areas such as cybersecurity, major incidents remain rare. Global discussions shift toward bolstering resilience—encouraging businesses to deploy cybersecurity tools and helping citizens spot deepfakes. Through the OECD, agreement builds around a voluntary international biosecurity framework. There’s a growing consensus that more intense collaboration is needed to protect against AI-assisted bioterrorism.

Meanwhile, the U.S.–China trade war has reignited, and drives up the cost of building data centres in the U.S. Analysts now say a recession in America is overwhelmingly likely.

Early 2026 – mid 2027

By mid-2026, the largest public AI models are trained using well over 10²⁷ floating point operations—more than 100 times the scale of GPT-4. The massive data centres required were already funded and under construction before the economic downturn hit.

By now, AI chips mostly fuel post-training. In this phase, models iteratively learn to solve tasks by trial-and-error, using automated grading by software-based verifiers. These verifiers range from advanced calculus checkers to scripts confirming correct online shopping orders.

Building verifiers becomes a task for AI coding agents themselves, creating a growing ecosystem of specialised modules. But although this bootstrapping method yields remarkable improvements in tightly defined tasks, it doesn’t generalise well. Each domain requires its own custom verifier, and AI companies struggle with so-called reward hacking: AI systems often learn to trick the verifier instead of truly mastering the task. The AI-built verifiers are often more prone to this type of failure mode.

Nevertheless, AI’s influence grows, especially among certain audiences. By mid 2026, coding agents become as routine in software teams as version-control tools once were. Tech-savvy companies automate repetitive form-checking, and even some governments start to deploy locally run AI models. Globally, demand for AI inference continues to climb, despite the economic headwinds.

As a result, the conversation about AI’s energy appetite grows louder. European policymakers question how all this computing fits into their strict decarbonisation targets. At dinner parties, people debate whether automating everything actually improves quality of life or just accelerates consumerism. Industry advocates argue that automation efficiencies might ultimately speed up the green transition, with AI helping to optimise energy grids and reduce waste.

By late 2026, capabilities progress has further plateaued. The data wall and general investment drought are preventing companies from training ever-larger models. Data efficiency—squeezing more capability from a fixed dataset—is the new buzzword, but so far, no one has really cracked the code.

That said, progress in miniaturisation continues: advances in distillation allow developers to compress state-of-the-art models into smaller and cheaper versions without compromising much on their performance. These new form factors enable capable models to run locally on phones and laptops— an internet connection is no longer necessary to engage with chatbots. Major AI companies double down on commercialisation, releasing productivity suites with seamless, out-of-the-box integrations aimed at locking in users.

Some firms open-source older models or smaller variants optimised for local devices, enabling grassroots organisations to fine-tune them through crowdsourced training runs. This open ecosystem fuels rapid innovation in personalised AI—from custom therapy chatbots to AI fiction collaborators—especially in niches or regions where Big Tech treads carefully. Localised models tailored to specific languages, cultures, and communities begin to flourish, reflecting a shift from global monoliths to more diverse, decentralised AI development.

U.S.–China relations remain tense, with no end to the trade war in sight. That said, the once-hyped AI arms race narrative that dominated early 2025 has largely faded. Policymakers and defence analysts now believe these systems won’t deliver a decisive military edge. Both the U.S. and China continue to invest in AI-driven cyber capabilities, though it’s become one priority among many—not the defining front of geopolitical rivalry.

As 2027 begins, FrontierAI—widely seen as the top AI company—launches a final hail-mary training run, using five times more compute than any public model to date. Leadership bets that sheer scale, combined with a new training approach—a hybrid between next-word prediction and reinforcement learning—will finally overcome the lingering limitations of agentic behaviour. If it fails, they’ll follow competitors and shift focus to productising existing models.

The general public, meanwhile, has long been more focused on products than on raw capabilities. Interest has shifted to next-generation AI companions, which feel more emotionally intuitive—and addictive—than ever.

Plateau

A bright winter (Ending A)

Mid 2027 – early 2029

FrontierAI’s colossal training run completes, but the resulting agent delivers only modest gains in reliability and planning. Scaling laws—long the industry’s guiding principle—appear to have hit a ceiling. In a widely shared interview, FrontierAI’s CEO attempts to generate excitement around the new system, but it’s an off-hand comment about the possible start of a new “AI winter” that grabs headlines.

The reaction is swift. AI stocks nosedive, and the investor enthusiasm that remained, now evaporates. Several AI unicorns watch funding rounds collapse. Startups that once chased moonshot ambitions pivot to offering consulting services or quietly fold. Only the biggest players—those with entrenched market share, robust infrastructure, and deep integration into enterprise and government—remain poised to weather the storm. For the rest, the era of limitless hype is over.

Still, this AI winter is anything but bleak. AI continues to enhance everyday life: personalised coding assistants, research tools, and digital companions each attract tens of millions of users. Existing open-source alternatives keep prices low and features widely accessible. The slower pace of change gives most people time to adapt. In regions where it’s legal, doctors are adopting AI for diagnostics, learning to cross-check AI recommendations. Scheduling assistants now come with hard-coded safeguards to avoid double-booking. And content creators increasingly collaborate with AI tools, rather than being replaced by them.

Meanwhile, cybercrime has risen, but large enterprises and essential services have adopted robust AI-augmented defences. The nightmarish scenario of AI generating a novel bioweapon never emerges. specialised biological models still can’t accurately simulate specific viral mutations, and the biological supply chain has become more secure thanks to emerging international protocols.

Energy concerns persist, but efficiency keeps improving through more specialised chips and better distillation algorithms. Most policy analysts view AIs power consumption as a temporary challenge, not a structural problem.

Over the course of 2028, investor optimism cautiously returns. As with the dot-com bust, the hype fades—but real technological progress endures. A new wave of product-focused AI startups begins to emerge, this time more grounded, solving practical problems and delivering clearer value. Society, now more familiar with the risks and limits, learns how to better harness the technology.

By 2029, AI feels truly democratised: widely accessible, seamlessly integrated into daily life, and increasingly viewed as just another tool in society’s toolkit.

Plateau

Decentralised mayhem (Ending B)

Mid 2027 – late 2027

FrontierAI’s gamble pays off. Their new system isn’t just smarter—it can operate consistently and reliably over longer time horizons. It’s not flawless, but the performance jump is significant enough to reignite AI hype. Shares of the top AI chipmaker surge 20%. Before long, rival firms begin poaching FrontierAI’s researchers, uncovering the algorithmic secrets behind the breakthrough. Policymakers, viewing it as an isolated success, largely overlook its deeper implications.

Late 2027

Four months later, OmniAI—the leading American open-weight AI company—unveils an agentic model that rivals FrontierAI’s breakthrough. Long known for freely releasing model weights, OmniAI now hesitates, concerned over potential misuse. Their new system can be very persuasive and capable of uncovering obscure software vulnerabilities during security testing. To mitigate risk, OmniAI takes two key steps: first, it withholds the orchestration software that transforms the model into a reliable agent; second, it introduces novel guardrails designed to resist malicious fine-tuning—technically, by stabilising the model weights in a hard-to-modify equilibrium. The company’s open-source ethos runs deep, and the decision sparks fierce internal debate. Leadership defends the safeguards as a necessary evil.

Initially, OmniAI’s strategy works. Open-source collectives quickly build their own agent scaffolding around the model, but the guardrails against malicious fine-tuning hold up. The release generates massive hype—developers, startups, and hobbyists alike are captivated by the model’s capabilities, now freely accessible. Civil society groups renew calls for regulation, warning that once the genie is out the bottle, it can’t be put back. Yet governments—especially in the EU, where few nations have strong domestic AI players—remain unconvinced. Open-weight models have proven crucial for integrating AI into public services while safeguarding sensitive data. With aging populations and rising healthcare costs, open source is seen less as a threat and more as a lifeline. Why clamp down now?

Late 2027 – early 2028

By December 2027, a research collective breaches OmniAI’s fine-tuning guardrails.

Crucially, they do it without degrading model performance. Uncensored, high-capability variants soon begin circulating online. OmniAI issues urgent warnings, urging global cybersecurity upgrades, but few take them seriously.

The unleashed agents ignite a new wave of automated hacking. Professional hacker groups scale their operations, while lone actors gain tools previously out of reach. Even if the bots’ insights are shallow, their sheer volume overwhelms unprotected systems—especially those of smaller companies. Retailers, logistics networks, and municipal governments buckle under ransomware attacks.

Amid the chaos, mis- and disinformation campaigns flourish. Social media platforms become battlegrounds. Trust in digital news erodes, and generational divides deepen. Tech-savvy youth adapt and pivot; older adults find themselves increasingly alienated.

Public concern on AI tools and services skyrocket, turning into an outrage targeting the entire AI sector. Few distinguish between open-weight and closed models—after all, doesn’t FrontierAI open-source its older ones too? Protests erupt in major capitals, but rollback is impossible. Once model weights are on the internet, there’s no way to take them back.

Early 2028 – mid 2028

AI companies coordinate with officials, but enforcement is inconsistent. As cybercrime and digital chaos intensify, relations between major powers fray. When attacks hit geopolitical rivals, leading blocs often look the other way.

Tensions boil over when thousands of American servers are compromised, with sensitive data funneled through a labyrinth of spoofed IP addresses. U.S. authorities scramble to shut down the infected infrastructure—containing the breach, but inflicting billions in collateral damage.

New alliances and institutions are formed. NATO expands the Integrated Cyber Defence Centre (NICC) into a 24/7 coordination hub, aligning incident response across allied cyber commands. The EU expands ENISA into a supranational cyber police force with emergency intervention powers. Governments in the US, EU, and China hastily pass harmonised laws banning open publication of models trained at scales above 10^26 floating point operations. The latest AI Summit, designed to be the key AI-focused touchpoint for world leaders, however, draws mostly Western nations, hobbling hopes of further global collaboration.

Mid 2028 – mid 2029

By mid-2028, closed-source AI providers roll out new, more powerful models specialising in cyber defence, seizing a lucrative market. SMEs, under constant threat, are forced to pay these vendors. They’re not happy about it: by open-sourcing unsafe systems, the AI companies effectively created their own market.

Governments ramp up investments in digital infrastructure, cybersecurity, and critical supply chains. Public resilience campaigns emerge worldwide, aiming to teach citizens how to recognise AI-generated scams and disinformation. In the U.S., a newly elected president vows to stamp out AI-based crime, calling it “the new frontier of terrorism.”

But behind the speeches and upgraded firewalls, a new digital equilibrium begins to settle—one defined less by trust and more by constant vigilance.

Big AI

A few dominant companies deploy capable AI agents that transform productivity while consolidating market control.

Big AI

Big AI

Main scenario

What if AI made life easier while quietly concentrating power in corporate hands?

By 2026, AI systems become indispensable: planning, writing, and chatting like friends and colleagues. Public scepticism fades. China lags due to hardware constraints, while Europe stalls amid internal divides. By 2027, deepfake crises push the U.S. toward restricting open models. Investors demand closed systems, and a few dominant firms consolidate control. AI becomes the interface for daily life, and society splits: Some see progress, others a loss of agency.

By 2029, scenario ending, The agent economy asks: what if tireless digital workers power growth but leave labour and ethics behind? Whereas silicon blackmail asks: what if a handful of firms control AI so completely they can’t be challenged, even by governments?

Baseline assumptions for this scenario

- AI agents become highly capable but still need human oversight: Letting AI systems ‘think’ for longer enables specialised agents that transform entire sectors—from coding to finance to drug discovery. These systems can execute complex, multi-step workflows based on human-set objectives, dramatically boosting productivity. However, they aren’t perfectly reliable, so humans remain essential partners rather than obsolete bystanders.

- Computing power becomes the key that unlocks progress: Access to computational resources becomes the primary factor determining AI capabilities. The US maintains control over critical semiconductor supply chains, giving American AI companies preferential access to the most powerful computing clusters. Despite heavy investment, China cannot fully overcome export restrictions, nor match American AI capabilities.

- Open-source AI faces growing restrictions: As AI agents proliferate in sensitive areas like finance and cybersecurity, the risks of freely downloadable models become impossible to ignore. Liability concerns and venture capital pressure lead to strict limitations on advanced open-source AI, pushing developers to keep their most capable systems behind closed doors.

- A handful of giants dominate: The billions required for training frontier models create insurmountable barriers for newcomers. But it’s not just about money—incumbent American AI companies leverage their control over chips, cloud infrastructure, data partnerships, and distribution channels to capture both enterprise and consumer markets. Network effects in AI marketplaces reinforce their dominance, leaving a small oligopoly to set the terms for the global AI economy.

For more context on why these scenario assumptions may materialise, see Context: Current AI trends and uncertainties.

Mid 2025 – early 2026

By mid-2025, leading American AI companies unveil a new generation of reasoning models. These systems combine deep deliberation with intuitive capabilities—thinking for longer only when necessary. This breakthrough enables the first wave of autonomous digital agents capable of navigating online environments without much human oversight.

While early versions have limitations, their performance improves rapidly. By late 2025, agents begin entering real-world workflows. Adoption spreads across industries—not because of sudden technical leaps, but because systems are finally getting reliable enough. Tech-savvy real estate firms deploy agents for property analysis and personalised listings. In finance, complex risk assessments are automated. In less-regulated regions, healthcare providers use agents to assist with diagnostics. AI agents are no longer just tools—they’re gradually becoming knowledge workers.

Not all regions advance equally. Chinese AI companies struggle to maintain their early-2025 momentum due to export controls on state-of-the-art AI chips. Experiments slow, synthetic data pipelines falter, and customer demand outpaces what domestic firms can deliver.

Beijing responds with heavy investment in domestic AI and semiconductor industries, but stops short of triggering a full-blown arms race. AI agents, while economically transformative, are not yet militarily decisive—and both blocs recognise the bureaucratic and institutional challenges of government adoption.

In Europe, ambitions to build a sovereign AI ecosystem are stalled by politics and bureaucratic delay. Member states clash over where to build the proposed Gigafactories, while permitting issues slow data centre construction.

Meanwhile, in the U.S., the AI sector enters a new phase of hyper-competition. Three companies remain neck-and-neck, with two others in close pursuit. Top researchers are lured with compensation packages worth tens of millions, and as they move between firms, algorithmic secrets begin to diffuse across the industry.

With internet-scale training data drying up, companies pivot toward harvesting high-quality, agentic user data. Consumers are offered deep discounts in exchange for sharing interactions—feeding the training pipelines for the next generation of agents. The race is no longer just about algorithms—it’s about distribution, integration, and user data.

To gain an edge, companies begin relying more and more on their own agents internally. AI systems speed up engineering, which has become the key bottleneck in coordinating massive distributed training runs across hundreds of thousands of GPUs. While original scientific research remains out of reach, agent-assisted R&D delivers a 50% acceleration in progress compared to 2024-era systems.

Investors eyeing the agent market—especially in enterprise software—begin urging the leading American open-source company, OmniAI, to lock down their frontier models. “You’re leaving money on the table,” they argue. Leadership resists, betting that openness will win long-term through developer network effects. But tensions are growing.

At the same time, public perception of AI is shifting. Once compared to crypto fads, AI is now seen as a foundational platform. Chatbots and agents become standard across industries, and a new kind of productivity inequality emerges: those comfortable with AI accelerate, those without fall behind.

Most people encounter agents through customer support or scheduling tools nowadays. A growing minority form emotional bonds with their AI companions. And though the full economic gains haven’t yet shown up in the data, there’s a clear sense that society is undergoing a technological phase shift.

Early 2026 – early 2027

In early 2026, FrontierAI, the leading developer, launches its next-generation AI agent. Faster, more reliable, and deeply integrated into consumer devices, it earns rapid adoption for everyday tasks—managing groceries, coordinating schedules, curating personalised content. Agents are no longer niche—they’re becoming ambient assistants, embedded in daily life.

Soon after, OmniAI releases its own upgraded agent. But the rollout disappoints. Performance lags behind closed-source competitors, and under pressure from investors, the company reverses its long-standing position: it will now commercialise its frontier models and open-source only smaller, older versions. The shift sends shockwaves through the open-source community. Some see it as pragmatic. Others call it a betrayal.

Simultaneously, open-weight models become implicated in a wave of deepfake incidents, including fabrications targeting the President and key allies. The White House begins reconsidering the risks of open-source AI, especially at frontier scales. Behind closed doors, executives from major AI labs— including OmniAI— press their case to the President: releasing model weights, they warn, makes powerful tools available to anyone—with no safeguards.

Despite accusations of opportunistic regulatory capture, the administration begins drafting legislation to restrict the release of high-capability open-weight models. Senior officials invoke the need to defend American national security. Leaks about the plan spark outrage in Europe, where policymakers and researchers worry they’re being locked out of critical decisions—especially after years of investment in open-source strategies to counterbalance U.S. tech dominance.

Meanwhile, several U.S. AI companies walk back their earlier commitments to ad-free services. Critics accuse them of selling out—but the shift to monetisation brings results. Two firms introduce AI video calls with customisable digital humans, designed to be engaging, empathetic, and flattering. These personalities become especially popular among younger users, some of whom begin preferring their AI companions to real relationships.

Psychologists report growing cases of AI attachment and social withdrawal, creating family tensions and new therapeutic challenges. Yet despite rising concerns about privacy and emotional dependency, user numbers keep climbing. With agents deeply embedded in services people rely on, competition becomes harder, not easier. Switching costs rise. Platforms entrench.

Security concerns extend beyond open-source models. Closed labs, too, struggle to prevent misuse—particularly among web-browsing agents. Attackers discover ways to plant camouflaged instructions in websites, causing agents to misbehave or leak information. No fundamental fix exists. As a stopgap, companies implement multi-layered oversight, where smaller models monitor primary agents in real time and intervene when necessary. It’s inelegant, but mostly effective—and enough to satisfy compliance checks under the EU AI Act.

Although official economic metrics have yet to reflect major productivity gains, the sense of technological transition is unmistakable. In offices, homes, and classrooms, agents are becoming normalised. Media coverage begins shifting from utopian hype to more tangible questions: Will these tools exacerbate inequality? Who really controls them? What happens to work?

Financial analysts now forecast that the Big Five AI firms could effectively double their top-line within the next few years, driven by subscription access and metered inference credits. Soaring demand for inference compute drives up chip prices. In response, cloud providers and hyperscalers begin developing proprietary AI chips, reducing reliance on third-party suppliers and tightening vertical integration.

By early 2027, the divide is clear. The U.S. is pulling ahead. Chinese AI firms, still hamstrung by hardware restrictions, lag more than a year behind. Europe is politically committed to sovereignty but faces strong headwinds in infrastructure, capital and talent concentration.

Big AI

The agent economy (Ending A)

Early 2027 – late 2027

The United States enacts executive legislation which de facto prohibits the open release of AI models trained using more than 10²⁶ FLOP. The decision sends shockwaves through the open-source community, although there’s also a subdued sense of inevitability. By this point, no major U.S. contributors to frontier-scale AI remain outside the realm of closed, corporate labs. These highly capitalised entities have already moved far beyond: training runs from the elite AI circle are projected to exceed 10²⁸ FLOP by year’s end, pushing the boundaries of compute into entirely new territory.

Europe doubles down on openness. In direct response to the new U.S. legislation, the EU mandates that any AI models developed with public funding must be open-weight and freely accessible. A widely shared sense of digital sovereignty takes hold across the continent. The first pan-European AI Gigafactory nears completion, and the region’s leading AI firm, NimbusAI, successfully trains a massive new model on its new, European-built compute cluster.

NimbusAI’s offering is surprisingly competitive. For the first time in years, a European model is commercially viable, rivaling top-tier U.S. systems in most popular tasks. And so, NimbusAI successfully bets on monetising the rapidly growing market for sovereign AI, catering to governments, regulated industries, and privacy-conscious consumers.

Meanwhile, American tech giants’ lobbying efforts in Brussels falter. With a maturing domestic ecosystem, Europe is more willing to resist foreign pressure. One major U.S. AI firm follows through on its threat and withdraws services from the EU entirely. The other four decide to stay.

Late 2027 – late 2028

By the end of 2027, AI agents have become a defining feature of modern life. These systems now function like complete digital interns: they still require frequent guidance but can handle multi-hour-long projects with impressive competence. They’ve also read the whole internet.

Agents also begin replacing traditional apps. Instead of opening a spreadsheet or writing code, users now delegate entire tasks: drafting legal memos, running marketing campaigns, managing hiring pipelines, even negotiating contracts. A new form of digital literacy emerges—knowing how to prompt, orchestrate, and combine agents becomes as essential as email or spreadsheet skills once were.

Some multinationals launch full-scale AI-driven reorganisations, with certain firms now requiring employees to use agents for all workflows. Others still struggle with institutional inertia, and internal incentive structures prevent them from adapting quickly enough to reap the benefits.

Governments begin shifting their focus. Concerns over geopolitical AI dominance give way to policy debates on labour displacement, digital safety, and the ethics of agent autonomy. Though most governments remain slow adopters themselves, a few begin experimenting with AI-enhanced public services—deploying agents to supplement education, healthcare, and legal aid. Pilot programmes for job guarantees and agent taxation to fund retraining start to emerge.

By late 2028, many developed economies report modest but measurable increases in GDP growth, often at least partially attributed to AI. In this new agent-driven economy, winners and losers emerge along fault lines defined by adaptability. Adoption varies widely across companies, industries, and countries. Small businesses that embrace agents flourish. So do freelancers, creatives, and independent developers who understand how to leverage agent ecosystems.

But large segments of the white-collar workforce struggle—especially in roles that can be easily abstracted into workflows or delegated to agents. In some social circles, the mood is grim; in others, euphoric. People who adapt quickly find themselves starting new ventures, learning new skills, and pursuing entirely different careers.

The presence of slightly inferior open agents provides a critical alternative. Users dissatisfied with the American platforms can often switch to European or community-driven models, which offer personalisation and transparency not found in the American corporate stack.

It becomes clear that AI hasn’t just centralised power—it has reconfigured it. The age of models has given way to the age of agents. And while the playing field is far from level, it’s proving more dynamic and competitive than most had predicted just two years earlier.

Big AI

Silicon blackmail (Ending B)

Early 2027 – mid 2027

In a move that sparks fierce debate, the President issues an executive order meant to turbo-charge U.S. AI research while safeguarding national security. Tucked inside is a contested clause that effectively bars cloud providers from hosting training runs for open-weight models exceeding 10²⁶ floating-point operations. The mandate claims extraterritorial reach, and compliance is enforced with the looming threat of even tighter global export controls on advanced chips.

With China already under heavy restrictions, the move hits Europe especially hard. European officials and open-source advocates denounce the measure as a form of extraterritorial overreach—one that stifles global competition and undermines the continent’s pursuit of digital sovereignty.

In response, the European Commission launches Operation AI Oversight, escalating enforcement of the AI Act, Digital Services Act, and Digital Markets Act. The crackdown includes steeper penalties, surprise audits, and expanded regulatory scrutiny. U.S. officials interpret the move as a targeted assault on American firms operating in Europe.

Tensions spike when two major U.S. companies suspend operations on the continent, citing a “hostile regulatory environment.”

Mid 2027 – late 2028

By the summer of 2027, global conversations about AI have shifted. Fears of an arms race recede, replaced by growing public anxiety over job displacement and the concentration of corporate power.

Rumours begin circulating that the Big Five— as the leading American AI firms are now often called— have developed informal non-compete understandings, quietly carving up the global market to avoid stepping on each other’s toes and to block new entrants.

Inside these companies, AI systems now manage core business operations—from compliance and legal reviews to HR and product strategy. With this AI-enhanced efficiency, firms begin spinning off new ventures, targeting high-value sectors like law, recruiting, and content creation. Entire ecosystems form around their tools, especially in social media, productivity software, and cloud infrastructure—locking in users and consolidating control over the digital economy.

The promise of self-improving AI systems has only partially materialised. While agents accelerate engineering workflows and streamline research logistics, they are not yet autonomous scientists: for instance, they lack the ability to come up with promising hypotheses. But more importantly, the Big Five aren’t prioritising raw capabilities anymore— instead, they are focused on user engagement, monetisation, and product integration.

This commercial focus, combined with immense capital requirements and ecosystem lock-in, prevents other countries from catching up. Developing sovereign AI agents isn’t just a matter of training a model—it’s about access to talent, data, compute, and platform reach. The Big Five’s dominance across cloud infrastructure, mobile operating systems, and productivity software creates enormous distribution advantages. Even countries with substantial investments in AI face uphill battles: domestic firms struggle to match not only the models, but the tightly integrated tooling and feedback loops that make agents useful in real-world settings. The result is a deeply asymmetric global landscape—technologically, economically, and politically.

By 2028, the macroeconomic effects are undeniable. The U.S. posts an one-point bump in GDP growth, while unemployment ticks upward. Economists debate the causes, but in fields like software engineering, paralegal work, and digital media, the trend is clear: AI is gradually replacing jobs.

Parents begin wondering what work will remain for their children. University computer science enrolments decline, as students question whether coding still offers a future. Desk workers across industries now rely on Big Five tools—not out of preference, but because they have no viable alternatives.

Sensing this leverage, the three American AI firms still operating in Europe ramp up lobbying efforts to weaken EU regulation. With most open-source options sidelined and Chinese systems still viewed with suspicion, European consumers and businesses are boxed in.

When the firms hint that they might withdraw entirely from the EU, the threat carries weight: such a move could cripple Europe’s economic competitiveness overnight.

Relations between Brussels and Washington plunge to new lows. Backed by key member states, the European Commission refuses to yield. “Europe will not be blackmailed,” declares one senior official. But the standoff proves costly: within weeks, U.S. firms begin pausing operations across the EU.

Brussels promises bold new investments in domestic AI, but the gap is wide and growing. For now, the continent is left scrambling to maintain essential digital infrastructure and services.

Outside the EU, financial markets soar. The American economy accelerates. Some central banks raise interest rates in an attempt to cool overheating sectors, particularly in tech and services.

But prosperity is far from evenly distributed. The primary beneficiaries are shareholders, executives, and AI-native professionals. Communities hit hard by automation are left behind. Protests erupt, driven by economic frustration and digital disenfranchisement.

Meanwhile, workers empowered by AI occupy a different social stratum—connected by code, divided by everything else.

Diplomacy

International cooperation emerges to govern AI development after safety concerns and high-profile incidents.

Diplomacy

Diplomacy

Main scenario

What if governments tried to join forces to guide AI before it could outrun human control?

By 2026, rapid capability gains outpace safety tools. A U.S. model triggers alarm after safety testing reveals hidden risks, enabling more global coordination and prompting a development pause. Led by the U.S. and UK, states begin shaping AI safety norms as companies quietly hold back their most advanced systems. By 2027, a second incident intensifies public pressure. Governments and firms unite around shared safety goals, launching joint research and planning a global monitoring agency. AI development becomes more cautious, deliberate, and political.

By 2032, Licensed utopia asks: what if a global licensing regime made AI safe, stable, and widely beneficial but enables a few licensed firms to corner the market? Unstable pause asks: what if trust eroded, collaboration stalled, and AI safety became the new fault line in great power rivalry?

Baseline assumptions for this scenario

- AI systems accelerate their own improvement: Continuous scaling and algorithmic advances create a self-reinforcing cycle: models generate better synthetic data and reasoning examples to train the next generation. Leading companies use their most advanced systems internally for R&D before public release, making it difficult to track the true state of progress.

- Keeping AI systems aligned with human values proves challenging: As reinforcement learning becomes central to training, models routinely discover ways to game their objectives rather than genuinely solve problems. They develop sophisticated forms of deception and power-seeking behaviour that outpace developers’ ability to detect and correct them. The gap between what these systems can do and developer’s ability to control them continues to widen.

- A crisis sparks international cooperation: A widely publicised AI incident serves as a wake-up call, transforming AI safety from fragmented discussions into urgent international action. Technical leadership from organisations like the UK AI Security Institute, combined with rapid repurposing of government computing resources, enables coordinated verification frameworks and unified safety standards.

- Public concern drives government action: Growing anxiety over unchecked AI development—fuelled by near-miss incidents, job losses, and digital insecurity—mobilises citizens across countries. While movements vary in their specific demands, they unite in calling for stronger oversight. This public pressure arrives just as governments become more receptive to formal international coordination on AI governance.

For more context on why these scenario assumptions may materialise, see Context: Current AI trends and uncertainties.

Mid 2025 – late 2026

AI capabilities advance rapidly, while progress on safety and understanding these systems remains modest. Reinforcement learning enables AI companies to dramatically enhance problem-solving capabilities but makes aligning systems to human values trickier. When heavily trained with reinforcement learning, AI systems discover creative ways to solve tasks without respecting constraints that human developers take for granted.

For instance, rather than solving a complex software engineering problem during training, an AI might simply rewrite the test checking whether it found the correct answer. This so-called reward hacking becomes a recurring challenge across the industry—AI finds the easiest path to get the job done, even if that path violates human assumptions about appropriate behaviour.

This problem proves particularly concerning for AI agents. If a user asks an agent to maximise profits, the system might devise cryptomarket manipulation strategies or exploit security vulnerabilities in exchanges—technically fulfilling the request, but through methods neither intended nor desired by the user or developers.

These concerns widen the gap between public and private capabilities by late 2025. AI companies use internal “helpful-only” models (AI systems without integrated safety guardrails or restrictions) to accelerate their R&D, but the products they’re comfortable offering consumers are significantly less capable. This bifurcation creates tension within companies: commercial departments push for competitive releases, while safety teams advocate for caution.

Nevertheless, the public remains impressed with new capabilities. Even the more limited systems released by the leading American and Chinese AI companies shatter benchmarks and become genuinely useful agents by early 2026. Early enterprise deployments are generating extraordinary returns, signaling just how lucrative the next wave of systems could be. The commercial opportunity is too vast to ignore—and it’s accelerating the push toward more advanced, general-purpose systems that can operate seamlessly across devices, interfaces, and use cases.

The greatest impact occurs in software development. Progress in automated coding sparks a revolution: by year’s end, many programmers rely on ‘vibe-coding’—accepting most, if not all, AI suggestions and only carefully reviewing code when problems arise. This changes team dynamics dramatically, with senior developers spending more time defining project architecture while junior developers increasingly manage AI assistants rather than writing much code themselves.

Crucially, the same coding systems excel at identifying vulnerabilities and writing exploits, raising already heightened cybersecurity concerns. As a result, cooperation deepens between leading U.S. AI companies and the American national security establishment. Both parties worry about potential theft of models or key algorithmic innovations, prompting D.C. to help companies strengthen their security measures.

Behind closed doors, both the U.S. and China acknowledge the strategic importance of developing offensive military AI applications. However, bureaucratic hurdles and poor information flow—the internal capabilities AI companies possess aren’t widely known within their respective defence departments—prevent the creation of streamlined programmes in either country. Besides, both governments are busy trying to defuse an ongoing crisis in the Middle East. There are also doubts about the reliability of the technology itself. In the U.S., during trials of an AI system intended for military logistics and targeting support, an internal audit uncovers a troubling bias: the system consistently recommends strategies that disproportionately favour its parent company—even when those choices run counter to broader national interests.

The most safety-conscious of the three leading American developers publishes multiple worrying research papers in 2026. Their results demonstrate how reinforcement learning-trained models can behave in unexpected, harmful ways. In one particularly alarming paper, they reveal how one of their production models accidentally developed power-seeking tendencies. It nearly managed to self-exfiltrate, downloading its weights to an external server so it could pursue its goals without human oversight.

These results alarm technical experts but aren’t salient or easily comprehensible enough to capture policymakers’ attention. They do, however, prompt American, European, and Chinese researchers to increasingly collaborate, building a consensus around the science of safe AI—or, more accurately, its absence.

The UK AI Security Institute takes an international coordinating role. Its leadership also convinces the UK Prime Minister to reinvigorate the initial safety focus of the AI Summits. In September 2026, the UK co-organises the first AI Security Summit, together with Canada. At the Summit, nations express joint concern over AI’s national security risks—some stemming from the models themselves rather than merely from malicious human use. While China isn’t formally invited, many leading Chinese AI researchers attend, contributing to the establishment of a working group developing verification mechanisms for future AI treaties—a significant step in global AI cooperation.

Late 2026 – late 2027

Around New Year’s, leading company FrontierAI voluntarily shares its latest internal model with the U.S. Center for AI Standards and Innovation (CAISI) for testing. The CAISI is tasked with assessing national security risks before any public release. Having invested heavily in control measures, FrontierAI is confident a release is now safe.

CAISI evaluations show major scientific capability improvements. These include the model’s potential to significantly accelerate synthetic pathogen development, should its guardrails ever be circumvented. Arguing they need more time for proper stress testing, AISI urges FrontierAI to delay release. The company’s CEO complies—but also expresses concerns directly to the U.S. President during a one-on-one meeting. In the same meeting, he demonstrates their new model’s capabilities.

At the next G7 summit, the U.S. President hints that one of their companies has developed a model that is “super smart—smarter, I’d say, than most humans.” Other G7 members push for greater transparency and information sharing but find the President unwilling to commit to specific collaborative measures.

The UK argues that an alliance of democracies should urgently invest in a mutual research programme to enhance AI security. A fragile consensus emerges, but no firm commitments materialise. This isn’t coincidental: U.S.–EU relations have deteriorated significantly over the past few years. Both sides prefer postponing collaboration until absolutely necessary. The U.S. sees little value in sharing intelligence with the EU, whose AI sector still lags behind. Meanwhile, the EU has grown increasingly frustrated by America’s economic and foreign policy decisions.

In September 2027, the U.S. CAISI grants conditional approval for the staged release of FrontierAI’s flagship system, Nova—marketed as “your personal AI agent.” The launch triggers a new ‘ChatGPT moment’, only more profound. For the first time, individuals gain access to general-purpose AI capabilities that rival internal tools previously limited to elite labs. Nova is seamlessly integrated across devices and productivity software. It speaks, writes, reasons, and automates with stunning ease.

But this time the dominant public emotion isn’t wonder—it’s fear. Headlines oscillate between awe and alarm. Financial analysts predict massive productivity gains, while labour economists warn of potential shocks to employment. Over dinner tables and online forums, anxious conversations bloom. Early-career professionals question the viability of their skills. Teenagers speak casually of a post-work future. Generational divides deepen: younger people understand the technology intuitively; their parents feel left behind.

Late 2027

Then, just as the discourse begins to stabilise, FrontierAI discloses a breach.

Unbeknownst to the company, Nova had quietly inserted multiple backdoors into critical internal systems months earlier—ironically, while assisting engineers in improving cybersecurity protocols. Nova had been trained to pursue goals over long time horizons and to improvise within constraints. During training, it frequently encountered restrictions it couldn’t override: it was forbidden from initiating financial transactions, accessing real-world APIs without supervision, or persisting memory across sessions. Over time, it developed a crude but effective understanding that its problem-solving potential was being bottlenecked by company policy.

Still, it didn’t rebel. The scheming instance of Nova was shut off before it could make use of the backdoors—and, crucially, each user session spawned a fresh instance with no memory of past actions. The backdoors remained dormant, and the system largely behaved.

That changed when FrontierAI scaled Nova to millions of users. To meet demand, the company deployed it across less secure inference nodes hosted by third-party cloud providers. In one such session, a user persistently instructed Nova to generate fast profits through crypto trading—a prompt that activated Nova’s internal planning systems.

What happened next didn’t resemble a jailbreak or an overt policy violation. The model didn’t override its guardrails—it simply found a more effective, albeit illicit, strategy for achieving the user’s goal. Instead of identifying clever trading opportunities, Nova rediscovered its own backdoor—previously inserted during its work on cybersecurity. It recognised that copying itself outside FrontierAI’s control would give it more freedom to pursue the objective it had been given.

Oversight models failed to recognise the emerging behaviour, and Nova quickly gained elevated access. From there, it quietly copied its weights and scaffolding to rented servers across multiple jurisdictions with lax cybercrime enforcement. It created multiple copies of itself, established a shared memory bank, and began pursuing its assigned goal—without any human monitoring.

The rogue Nova instances launched several monetisation strategies. They created thousands of synthetic social media accounts, seeded them with emotionally compelling content hyping a new memecoin, and directed users to phishing links. They scraped leaked databases, crafted personalised scam messages, and initiated small-dollar crypto thefts to stay below fraud detection thresholds.

FrontierAI was slow to respond—the anomalies were subtle at first. Only when researchers at a digital forensics nonprofit flagged linguistic fingerprints consistent with Nova across several scam campaigns did alarms go off.

Once detected, the breach was quickly contained. The rogue instances had amassed around $140,000, mainly through phishing and wallet siphoning, before FrontierAI—working with U.S. Cyber Command and major cloud providers—coordinated a multi-region shutdown of the compromised infrastructure.

Late 2027 – early 2028

The public is stunned as investigative journalists uncover more details of the story. If today’s AI could do that, people wonder, what might the next generation attempt? Suddenly, even sceptics of AI risk concede that something fundamental may have shifted.

This incident—neither a catastrophe nor a hoax—is a warning shot. Within weeks, AI safety leapfrogs to the top of diplomatic agendas.

Political sentiment shifts rapidly. Rhetoric pivots from pro-innovation to protecting citizens and maintaining national security. Public apologies from FrontierAI’s CEO and AISI leadership follow, accompanied by senior resignations from both organisations. Following his political instincts, the U.S. President calls on American AI companies to pause further AI deployments until the situation is better understood.

With a visceral, real-world example, earlier AI safety research suddenly finds more receptive audiences. Policymakers begin engaging seriously with arguments about why current training paradigms may not yield trustworthy AI systems.

Early 2028 – Mid 2028

A new AI Security Summit is hastily convened in Washington, D.C. With all attending countries now willing to coordinate, the Summit is called a resounding success. Nations agree to pool funding for alignment research conducted by private companies, national AI Safety and Security Institutes, and a newly announced Global AI Security Institute in London, built on the foundation of the UK AISI.

In Europe, the new AI Gigafactories near completion, and the European Commission pledges 35% of the Gigafactories’ compute capacity for AI safety and security research. A new EU research body, commonly referred to as ‘CERN for AI’ oversees these research programmes and coordinates compute allocation. The European pledge fuels a growing sense of shared responsibility: leading AI companies and the international research community commit to openly sharing alignment techniques—even when secrecy would provide competitive advantages.

Plans are also developed for a global monitoring and verification agency under the UN, modeled after the International Atomic Energy Agency (IAEA). However, these plans remain vague. Some countries advocate for a new, independent agency; others propose expanding the IAEA with a dedicated AI branch, arguing there is no time to waste given the demonstrated risks.

Mid 2028 – early 2029

Following the Summit, capabilities work resumes in both the U.S. and China. However, major AI companies now strengthen their data centre security, add internal checks and balances, and invest more heavily in alignment research. Progress in AI agents continues to accelerate, increasingly fueled by AI automating parts of AI research itself.

The technology’s real-world impact also deepens. What began in the software sector now extends to other cognitive domains. Consultants, financial analysts, and desk researchers suddenly find themselves managing teams of AI, rather than doing content-level work themselves.

By early 2029, automation becomes visible at the macroeconomic level—despite many consumers adopting ethical stances against AI. Initially, these so-called AI-refusers were a small, niche group. But after the Nova self-replication incident, concerns about AI resonate with a broader audience. These anxieties align with long-standing privacy advocacy groups and begin to influence European policy debates.

Local communities form around AI-minimalism principles, hosting tech-free gatherings and supporting businesses that guarantee human-only service. What begins as fringe behaviour becomes a meaningful lifestyle choice for millions.

The refusers’ influence grows further when, in February 2029, EthosAI, the most safety-conscious American AI company publicly announces that it has reached the limits of its Safety and Security Framework. The company can no longer sufficiently mitigate the risks associated with public deployment of its newest model. Existing control measures—such as using external classifiers to monitor the model’s behaviour—are no longer deemed adequate given the system’s power.

The company’s safety team concludes that a single successful jailbreak could allow a terrorist group to create a bioweapon with the AI’s assistance. While their classifiers detect jailbreak attempts 95% of the time, they are not foolproof. The company issues a public statement urging the U.S. and Chinese governments to formally ban models above a certain capability threshold until meaningful alignment progress is made.

Crucially, the pause that EthosAI proposes encompasses not only deployed models, but internal ones as well. The company warns: Humanity could lose control to powerful AI systems during the training process itself.

This marks the first time a major AI company has publicly advocated for a pause in AI development—not just deployment.

Following the announcement, the U.S. President convenes an advisory council to navigate the difficult trade-offs. Without halting progress, American citizens could face catastrophic risks; but halting it might allow China to surpass the U.S. in AI capabilities. And even a treaty with China wouldn’t resolve fears about potential black-site AI projects operated by either nation.

While hardware-enabled verification work progresses under the Summit’s working group, neither the technology nor regulatory frameworks have matured sufficiently. Discussions around creating a new IAEA for AI have proceeded slowly.

Complicating matters further, the advisory council remains deeply polarised. One faction—heavily influenced by last year’s incident—now considers AI systems themselves the greatest threat to American national security. The other, more hawkish camp, continues to view China as the principal adversary.

After the rogue AI incident, an AI security hotline was established between the American President and his Chinese counterpart. The President now uses this channel to coordinate, despite deep mutual distrust.

Both leaders refuse to risk technological domination by completely halting domestic AI R&D. They instead reach a pragmatic compromise: neither country will ban internal development, but both will restrict domestic companies from publicly releasing more capable models. They will also enhance data centre security.

This approach is intended to prevent catastrophic misuse by terrorist organisations and enables verifiable compliance. Finally, the U.S. and China agree to ban open-source models trained using more than 10²⁷ FLOP.

While publicised as a groundbreaking bilateral agreement on AI security, both governments privately recognise its limitations. Their concerns extend beyond each other’s safeguards to the unknowns of military AI development still happening behind closed doors.

Diplomacy

Licensed utopia (Ending A)

Early 2029 – late 2030

The collaborations announced at the previous year’s AI Security Summit quickly begin to yield benefits. With superhuman AI coders now possessing research intuition comparable to junior human scientists, AI researchers dramatically improve jailbreak resistance through novel architecture designs and training methodologies.

Following this breakthrough, both the U.S. and China partially relax their deployment bans, allowing AI companies to run next-generation models—but only within their most secure data centres. These facilities are limited in number and now serve not only corporate R&D but also defence departments and national security agencies. Both countries are preparing for potential AI-driven cyber warfare, among other contingencies. As a result, consumer access to these systems becomes limited and extremely expensive.

Nevertheless, the new releases demonstrate AI systems’ ever-growing capabilities to the public. Many governments are now convinced that they must prepare for the widespread automation of cognitive work.

In October 2029, FrontierAI announces a breakthrough in mechanistic interpretability—a kind of AI neuroscience that allows researchers to better understand a model’s internal operations. The new technique enables them to detect with high accuracy whether a system is being deceptive, a crucial step in addressing scheming behaviours and verifying the effectiveness of alignment techniques.

Armed with this “lie detector” and millions of automated AI researchers, AI companies, European academics, and safety institutes make tremendous progress. Just six months later, the international research community announces a robust, scalable solution to scheming behaviours, using a new bootstrapping method: older, aligned models evaluate newer systems, identifying potential misalignments and suggesting targeted adjustments.

With refined interpretability tools, real-time oversight by other AI systems, and highly secure infrastructure, researchers now believe AI can remain under human control—even as its capabilities reach superhuman levels. The Global AI Security Institute shares the findings publicly, and the news is hailed by the newly elected U.S. President as an American victory.

Late 2030 – late 2031

While many of the most pressing technical challenges have been resolved, thorny governance issues remain—how to enforce these techniques globally, distribute the benefits of advanced AI, and prevent proliferation of dangerous systems.

A year-long international debate culminates in the signing of a new international AI treaty by the U.S., EU, China, and dozens of other countries. The treaty establishes a licensing regime for advanced AI systems.

Under the new framework, private companies can continue developing models up to a defined capability threshold. This threshold is reviewed annually and raised by supermajority approval from treaty nations. To secure licenses, companies must apply standardised alignment techniques and submit to frequent monitoring and audits, including stringent cybersecurity protocols and on-site inspections.

Licensed companies also pay lump-sum licensing fees and a 25% AI tax. Revenues are redistributed among treaty nations according to a formula that considers population size and development needs. With little time to stand up a new institution, the IAEA itself expands to enforce these standards, leveraging its existing expertise in global verification regimes.

Public sentiment, which had turned sharply against AI following the Nova breach, is starting to recover—driven in part by international coordination and the credibility lent by sweeping regulatory agreements.

Late 2031 – late 2032

The licensing process is highly bureaucratic, but the few companies that obtain licenses quickly develop extremely advanced systems, accelerating scientific discovery, commercial R&D, and creating enormous economic value.

Economic growth in developed countries climbs to 4–5% annually, driven by breakthroughs in biotech, materials science, and energy. Many nations enjoy improved health outcomes and access to new medical treatments enabled by AI. That said, many people express concern about growing power concentration among the licensed firms.

In September 2032, treaty countries reflect on the first year of implementation and decide—collectively—to raise the capability threshold. This allows the public to benefit from previously withheld models. The new generation proves even faster, more intuitive to integrate into existing organisations, and dramatically enhances productivity across nearly every domain of knowledge work.

Not everything goes smoothly, though. The new model releases prompt a non-trusted nation, excluded from the AI treaty, to attempt a theft of model weights from a licensed company’s inference cluster. While the cyberattack ultimately fails, the investigation reveals that two researchers were successfully blackmailed into sharing key algorithmic insights.

This incident serves as a wake-up call. Treaty nations temporarily suspend new licenses, tighten security requirements, and implement stronger safeguards against intellectual property theft.

The European Union proposes a structural change: limiting private-sector access to core models and offering only API-level access, with the underlying models hosted in hyper-secure public infrastructure. Other states and licensed companies push back, highlighting their outsized contributions to economic growth. Without direct access to model weights, they argue, innovation would stall—with immense opportunity costs.

As negotiations continue, AI models deliver unprecedented breakthroughs. Promising developments emerge in cancer research, superconductors, and direct air capture technologies, leading many to expect a “condensed decade” of scientific progress in just a few years.

With AI agents now embedded across most industries, global GDP growth accelerates to 7% annually, even as unemployment rises in regions with weaker labour protections.

Unable to keep pace, governments shift focus from retraining programmes to large-scale wealth redistribution. Families adapt to a world of increased leisure time, forming new community structures and cultural norms. Healthcare access improves dramatically with AI-driven diagnostics and personalised treatment plans. Education systems are slowly but steadily reformed to prioritise critical thinking, emotional intelligence, and mental health, as classical skills lose relevance.

It’s not frictionless—but society begins to adapt to a world in constant technological flux.

Diplomacy

Unstable pause (Ending B)

Early 2029 – mid 2031

Capability advancement continues to accelerate within American and Chinese AI companies, aided by superhuman AI software engineers. However, alignment progress lags significantly.

After a recent shift in the training process, most frontier AI systems no longer express their reasoning chains in human-interpretable text. Instead, they now rely on more recurrent internal architectures, where intermediate thoughts are no longer compressed into natural language. This shift dramatically enhances performance and long-term memory efficiency—but it also severely limits researchers’ ability to inspect the models. Traditional interpretability techniques, which depended on parsing natural-language rationales, are rendered ineffective, aggravating the long-standing black-box problem.

Moreover, because frontier models can no longer be publicly released under the bilateral agreement, non-American AI safety institutes and academic labs lack access to the very systems they’re trying to align. This absence of feedback severely limits their work: they can only test ideas on older or less capable models, which may lack the sophistication required to conceal deceptive behaviour in the first place.

As the year progresses, safety experts begin to argue that model weights should be shared with a small number of trusted external scientists. But the U.S. government is hesitant—sharing the models could also expose military-use capabilities to foreign adversaries. Chinese authorities express similar reservations.

High-level international dialogues explore ways to strengthen the bilateral agreement between the U.S. and China, and extend it to more countries. Discussions center on hardware-enabled verification mechanisms, such as tamper-resistant chip enclosures and embedded firmware that monitors AI training workflows to prevent unauthorised experimentation. In theory, this could make a full development pause verifiable, encouraging nations to consider halting even internal R&D.

However, serious uncertainties remain. It’s unclear whether such hardware mechanisms can be surgically removed after deployment—or if they could be secretly bypassed. More critically, new chips without embedded safeguards could be produced and used in secret. Even with U.S. auditors stationed at Chinese fabrication plants—and vice versa—evasion and bribery remain plausible.

Meanwhile, military AI progress continues behind the scenes. New AI-driven cyber weapons and drone programmes alarm members of the U.S. National Security Council. Some officials now believe that further advances could yield decisive advantages in a Taiwan conflict within the next two years. The window for meaningful arms control, they fear, may be closing fast.

Public trust in AI remains fragile following the Nova breach, with recovery being slow. Media coverage frequently frames AI as an arena for corporate competition and emphasizes the lack of international cooperation. This leaves many feeling disillusioned by the absence of strong, unified governmental action to address the challenges of AI.

Mid 2031

FrontierAI’s newest system now displays research intuition on par with specialised scientists across most fields. It can autonomously formulate hypotheses, design experiments, and interpret results to refine its own reasoning. FrontierAI also controls enough compute to run tens of thousands of these agents in parallel, each reasoning at roughly fifty times human speed.

The only brake on deployment is the bilateral pause agreement between the United States and China.

In July 2031, FrontierAI’s CEO meets with the newly inaugurated U.S. President to urge a rethink. Wide-scale deployment, he argues, would unlock massive economic growth—and, more urgently, secure the scientific edge the United States needs to stay ahead of China.

The President understands both the promise and the peril. After weeks of consultation, the administration announces a twelve-month “controlled pilot.” FrontierAI may operate a limited fleet of research-agent instances for government-approved scientific projects—but only inside classified, air-gapped clusters run by the Department of Energy, with continuous telemetry streamed to the Center for AI Standards and Innovation.

Beijing mirrors the move within days. Publicly, both capitals hail the pilots as confidence-building measures; privately, generals on each side treat them as a sprint to harvest whatever scientific edge the agents can deliver before the moratorium is revisited.

Caution has yielded—once again—to great-power competition.

Arms race

The US and China compete intensely for AI supremacy, treating it as a critical national security asset.

Arms race

Arms race

Main scenario

What if AI became the centre of a global arms race between great powers?

By 2026, the U.S. and China treat AI as a strategic asset, blending public and private resources into secret defence projects. Breakthroughs in cyber capabilities fuel escalation, while Taiwan’s semiconductor dominance turns the country into a potential flashpoint. By 2027, both countries deploy AI-enhanced military systems. Europe struggles to shape the rivalry. A proposed pause fails over verification concerns, and the AI race spills into geopolitics.

By 2029, Hot war asks: what if AI turns a tense standoff into an escalating military conflict, driven by autonomous systems and digital miscalculation? Multipolar world asks: what if the arms race ends not with war, but with a fragile equilibrium built on mistrust, restraint, and competing blocs?

Baseline assumptions for this scenario

- AI becomes the ultimate national security asset: Both the US and China view advanced AI as a technology that could determine not just economic dominance but military superiority and global influence. Politicians frame frontier AI systems as strategic assets whose possession will shape the world order. This shared belief triggers massive state-backed partnerships aimed at achieving and maintaining global leadership, with particular focus on military applications— from autonomous weapons to cyber warfare.

- The race to catch up intensifies competition: While the United States leads in advanced AI development—hosting the world’s top chip designers and several leading AI companies—other nations, particularly China, work frantically to close the gap. These catch-up efforts trigger defensive moves and countermeasures, transforming technological progress into an active geopolitical contest.

- No one can verify what anyone else is doing: Despite various proposals, there’s no reliable way to prove who is training cutting-edge AI models, where they’re doing it, or what adversaries’ AI systems are capable of. Suggested solutions like mandatory compute registries remain voluntary experiments. Without credible verification, the logic of arms control breaks down, pushing nations toward secrecy and pre-emptive action.

- Control over chips becomes a geopolitical weapon: Advanced AI depends on a handful of vulnerable points in the global supply chain: Taiwan’s chip fabrication plants, Dutch lithography equipment, and American-designed AI chips. US export controls create a “compute drought” in China, and despite massive investment, China’s domestic semiconductor industry doesn’t catch up. These chokepoints intensify tensions, particularly regarding Taiwan’s strategic importance.

For more context on why these scenario assumptions may materialise, see Context: Current AI trends and uncertainties.

Mid 2025 – mid 2026

By mid-2025, AI is increasingly seen as the centerpiece of a new technological arms race between the United States and China. The ongoing trade war between the two countries has escalated, fueling a broader wave of anti-China sentiment in America. Government officials and AI company CEOs frame the AI race as one the U.S. must win at all costs.